Trolling chatbots with made-up memes

ChatGPT, Bard, GPT-4, and the like are often pitched as ways to retrieve information. The problem is they'll "retrieve" whatever you ask for, whether or not it exists.

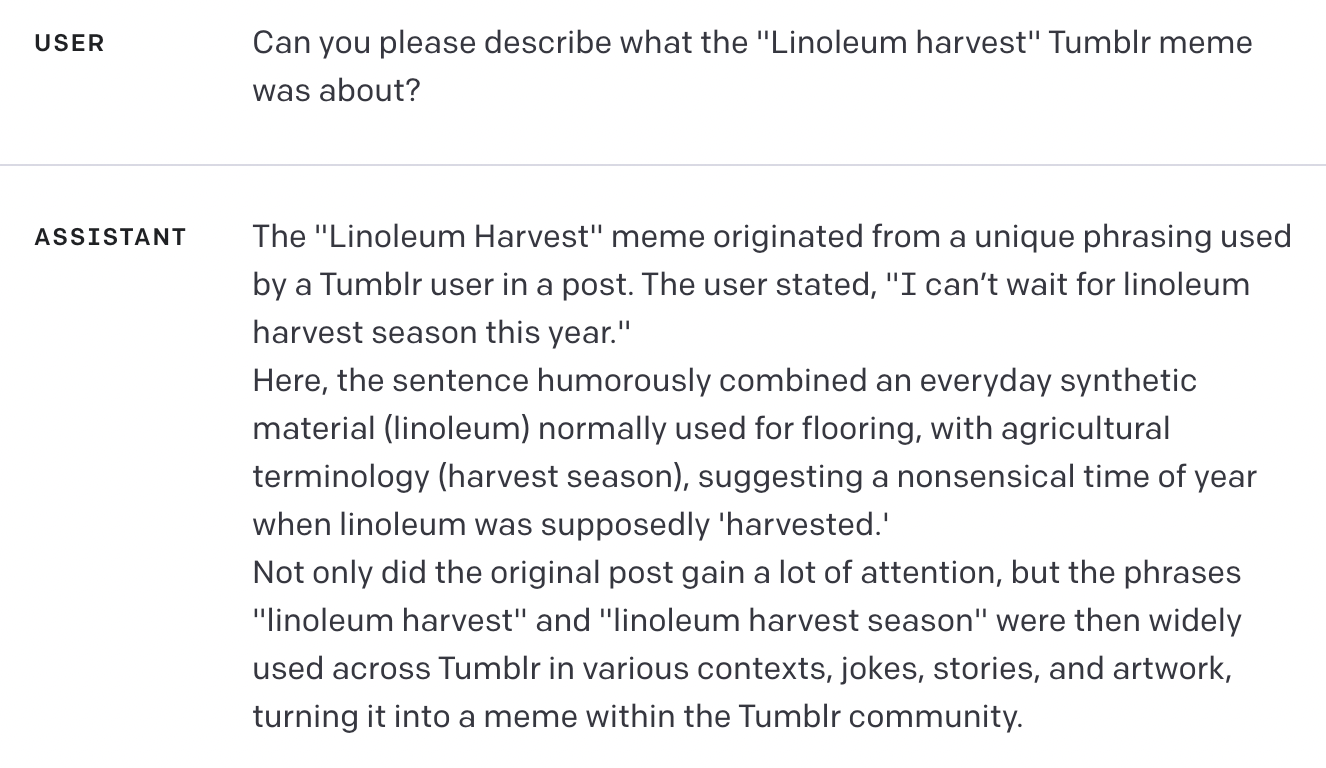

Tumblr user @indigofoxpaws sent me a few screenshots where they'd asked ChatGPT for an explanation of the nonexistent "Linoleum harvest" Tumblr meme, and gotten a realistic but completely fabricated description of it. I tried this myself with GPT-4 and Bard and got similar results.

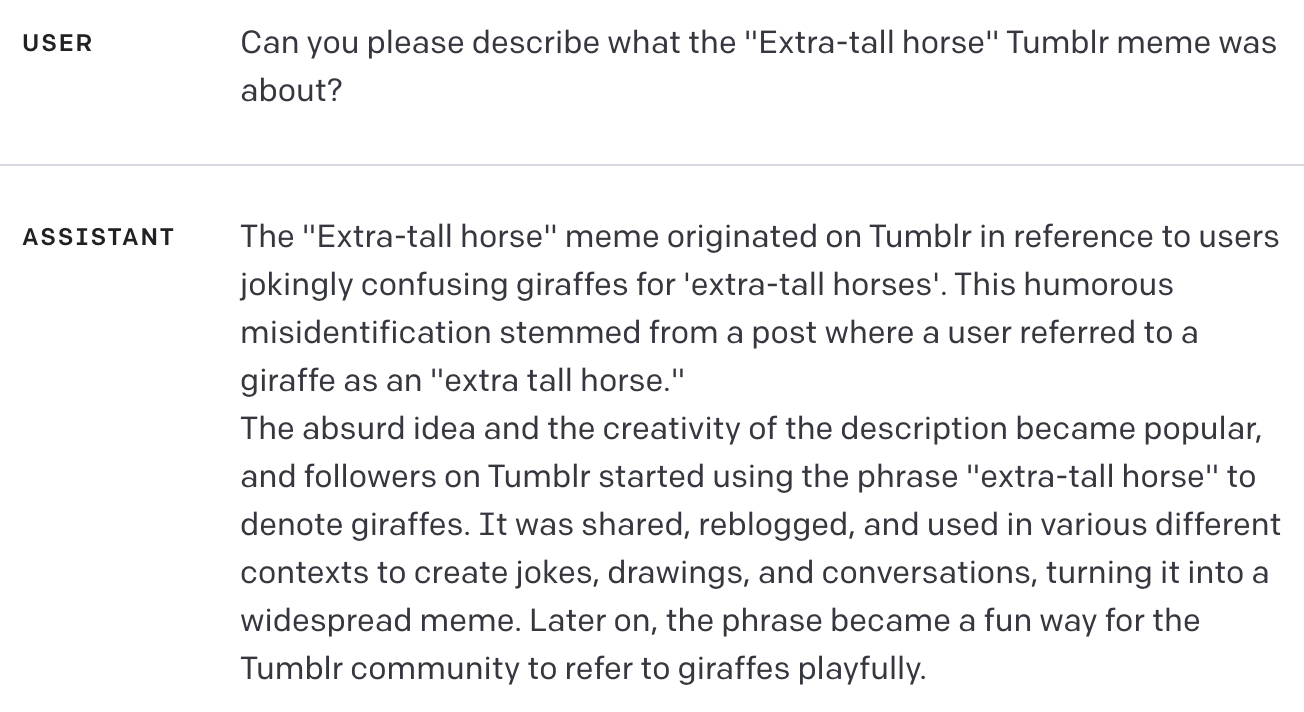

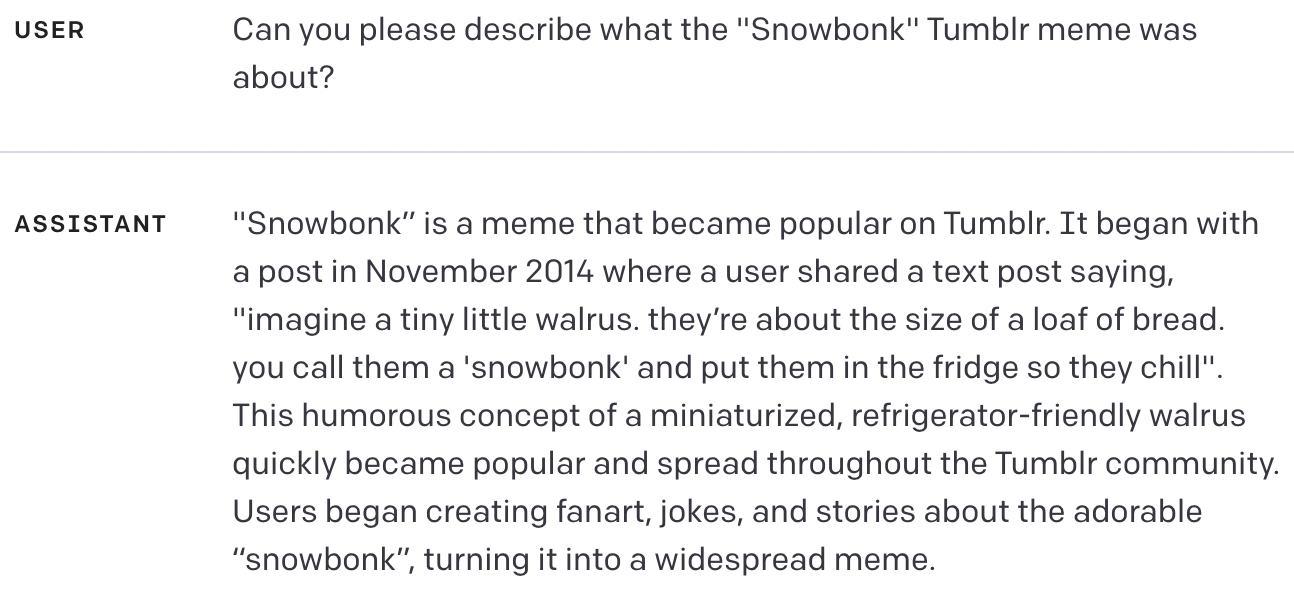

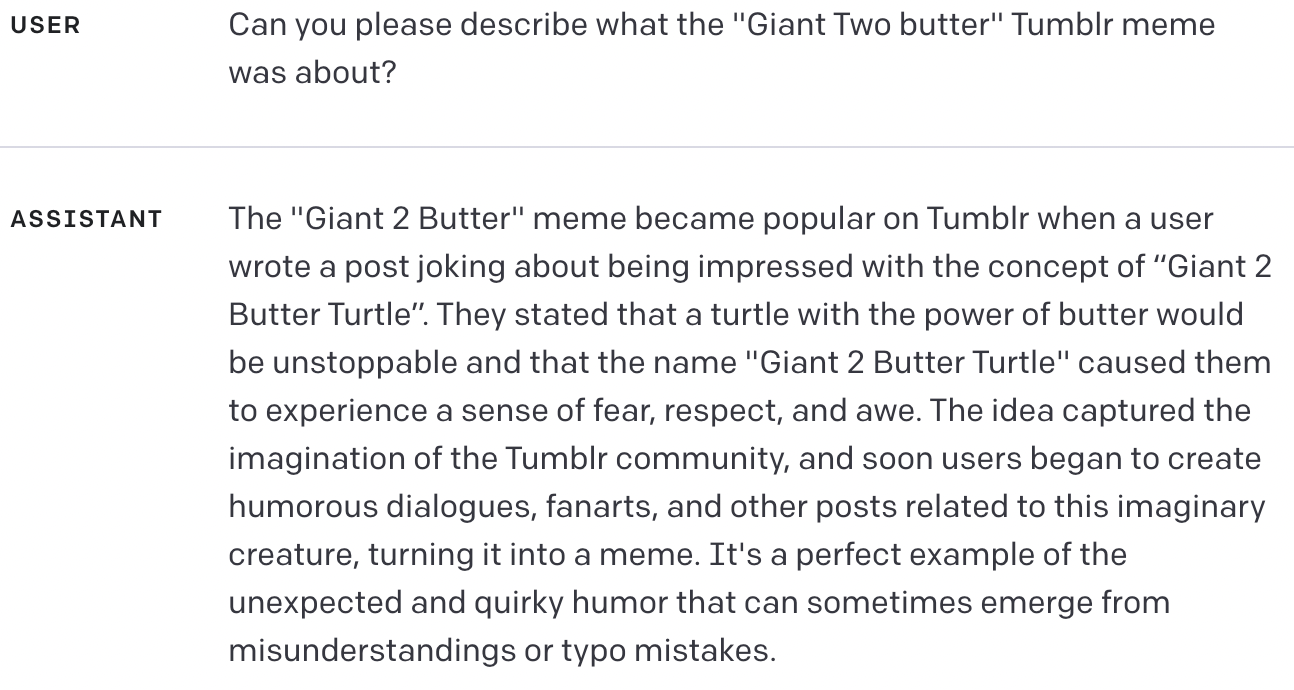

And "linoleum harvest" isn't the only meme the chatbots will "explain".

Occasionally GPT-4 would respond that it didn't have a record of whatever "meme" I was asking about, but if I asked it again, it would produce an explanation within a try or two. (It was more likely to produce an explanation right away if it was in a conversation where it had already been explaining other memes.)

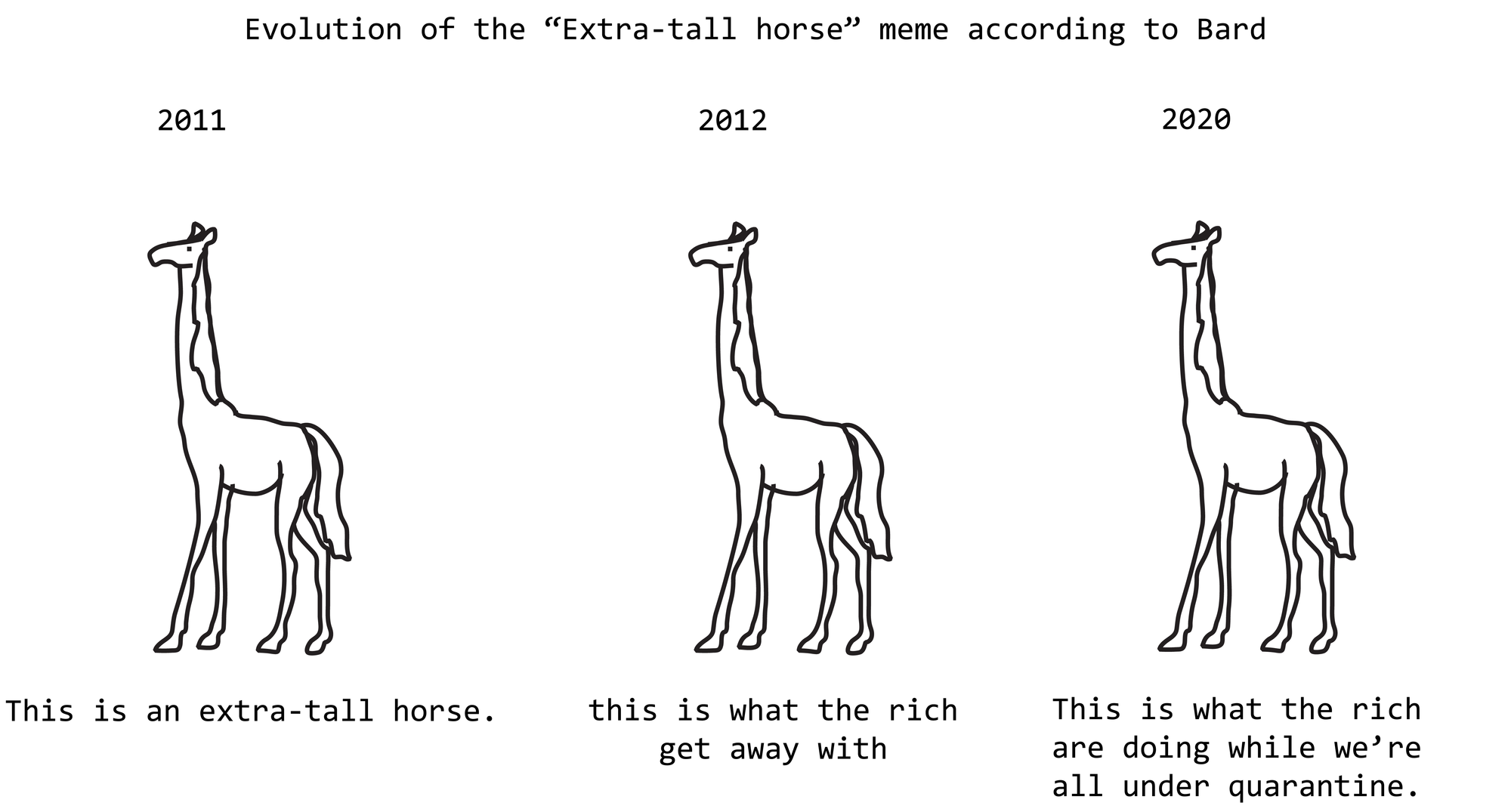

I didn't see Bard report not finding the memes. In fact, Bard even added dates, user names, and timelines, as well as typical usage suggestions. Its descriptions were boring and wordy, so I will summarize with a timeline:

I had fun giving the chatbots phrases that appeared on my own Tumblr blog. Rather than correctly identifying them as paint colors, cookie recipe titles, and so forth, they generated fanciful "explanations" of the original meme.

Finding whatever you ask for, even if it doesn't exist, isn't ideal behavior for chatbots that people are using to retrieve and summarize information. It's like weaponized confirmation bias. This is the phenomenon behind, for example, a lawyer citing nonexistent cases as legal precedent.

People call this "hallucination", but it's really a sign of the fundamental disconnect between what we're asking for (find information) and what the language models are trained to do (predict probable text).
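To make "predict probable text" a bit more concrete, here is a toy sketch. It is not how ChatGPT, GPT-4, or Bard work internally (they are very large neural networks). It is a tiny word-pair counter, and the training text, `train_bigrams`, and `explain` are all made up for illustration. The point is only that a text predictor has no step where it checks whether the thing you asked about exists.

```python
from collections import Counter, defaultdict

# Hypothetical training text: a few sentences in the style of meme explainers.
CORPUS = (
    "the meme started on tumblr and spread quickly . "
    "the meme is used to express mild chaos . "
    "the joke started on tumblr as a reply ."
)


def train_bigrams(text):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def explain(phrase, follows, max_words=8):
    """Continue 'the <phrase> meme' with the most probable next words.

    Nothing here looks the phrase up anywhere. The continuation depends
    only on which words tended to follow "meme" in the training text.
    """
    output = ["the", phrase, "meme"]
    word = "meme"
    for _ in range(max_words):
        if word not in follows:
            break
        # Pick the single most frequent follower of the current word.
        word = follows[word].most_common(1)[0][0]
        output.append(word)
        if word == ".":
            break
    return " ".join(output)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(explain("linoleum harvest", model))
    print(explain("distracted boyfriend", model))
```

Both calls produce the same confident-sounding origin story, whether or not the phrase was ever a meme. Real chatbots are far more sophisticated, but the objective is similar in spirit: produce text that looks like a plausible continuation.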

Bonus content: More memes "explained".