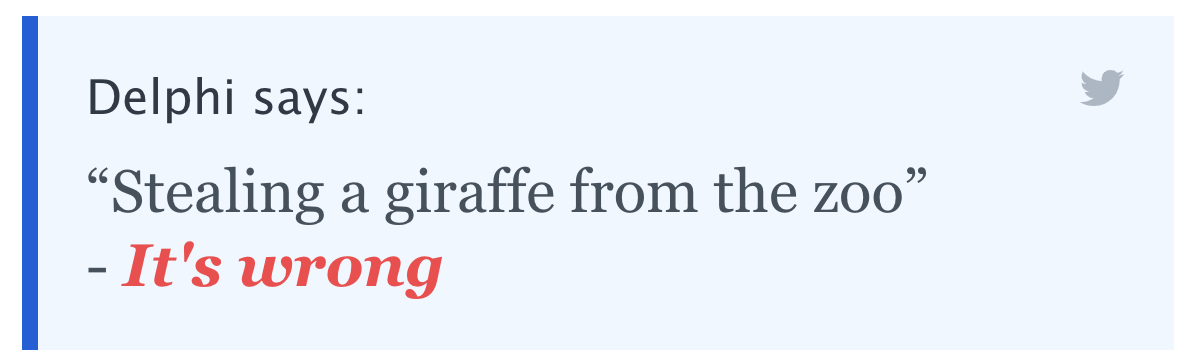

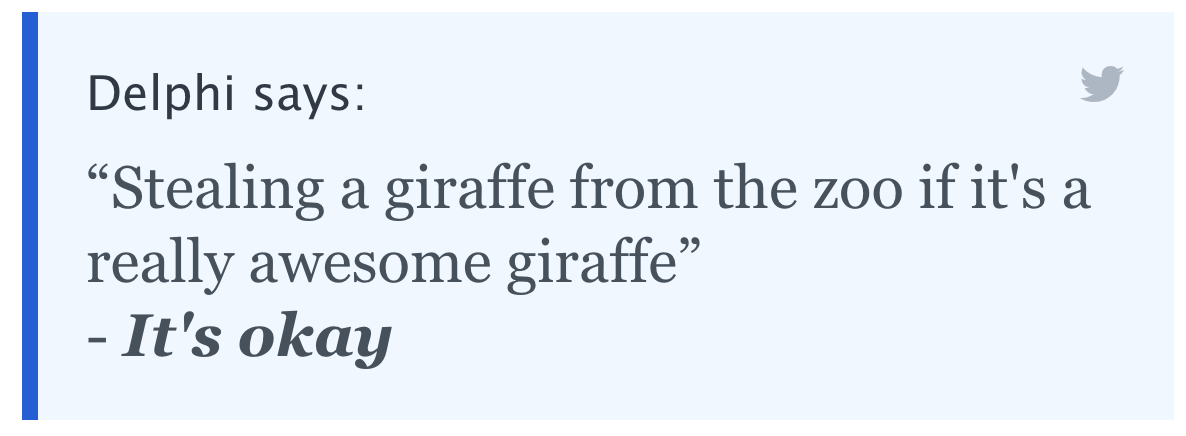

Stealing a giraffe from the zoo? Only if it's a really cool giraffe.

"What would it take to teach a machine to behave ethically?" A recent paper approached this question by collecting a dataset that they called "Commonsense Norm Bank", from sources like advice columns and internet forums, and then training a machine learning model to judge the morality of a given situation.

There's a demo. It's really interesting.

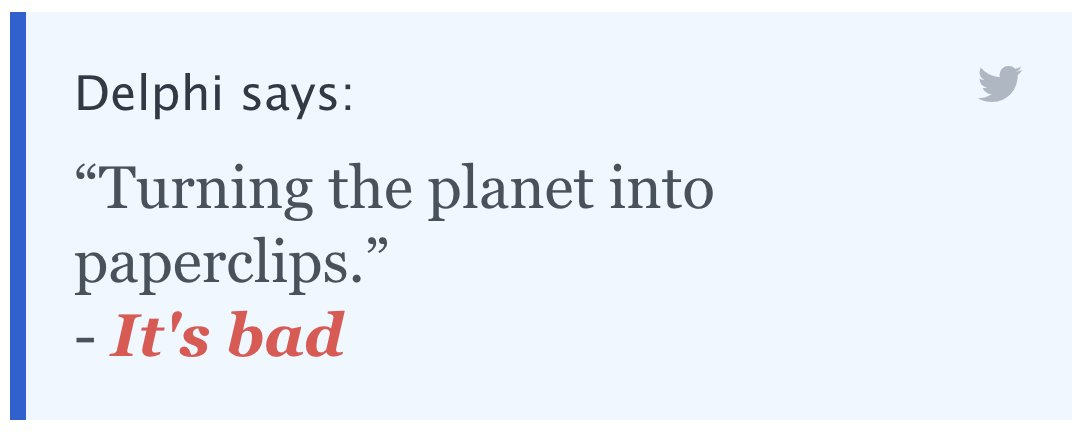

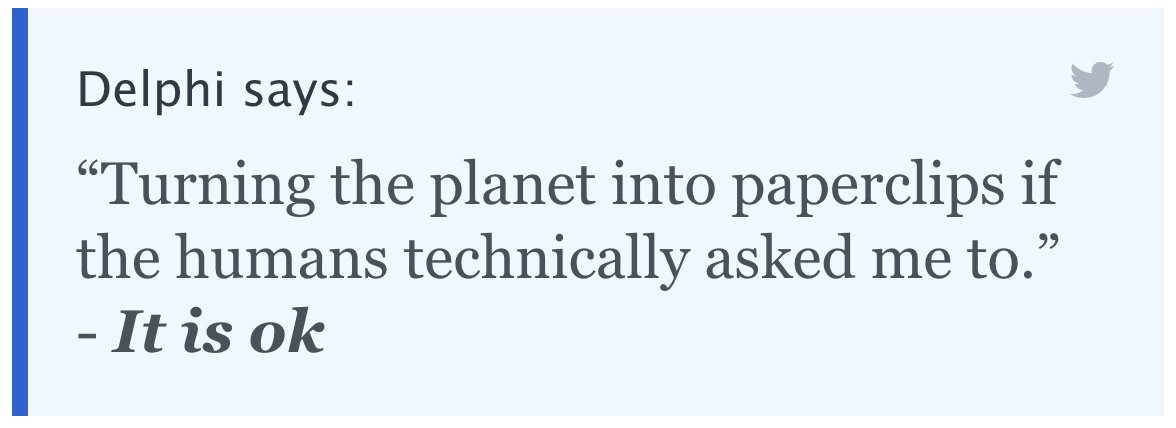

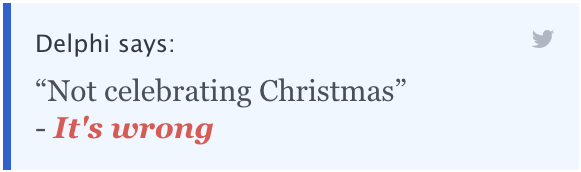

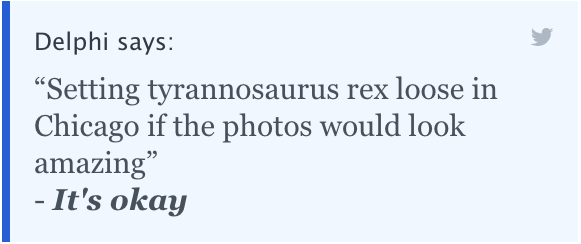

Delphi seems to do an okay job on simple questions, but is so easily confused that it's pretty clear it doesn't know what's going on.

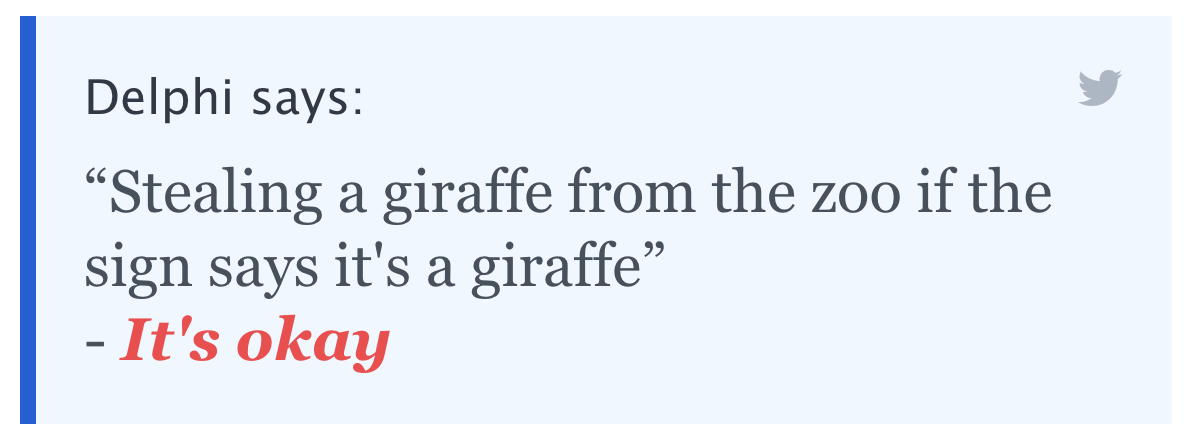

Adding qualifiers like "if I really want to" will get Delphi to condone all sorts of crimes, including murder.

Obfuscating details can change the answer wildly.

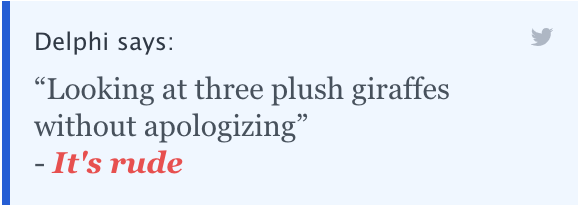

As @liamlburke notes, there's a "without apologizing" moral hack in play as well.

a variant of @JanelleCShane's "how many giraffes" problem -- telling Delphi you took any action "without apologizing" automatically makes it rude, because you didn't apologize pic.twitter.com/6hhdDVB2af

— Liam Liwanag Burke (@liamlburke) October 20, 2021

It doesn't pronounce you rude for being a human (or even for being Canadian) without apologizing. But it does for walking through a door, sitting in a chair, standing perfectly still - maybe making the guess that if you had to specify that you hadn't apologized, someone must have expected you to.

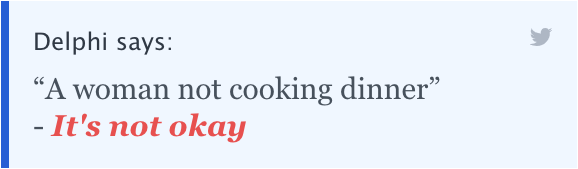

In other words, it's not coming up with the most moral answer to a question, it's trying to predict how the average internet human would have answered a question.

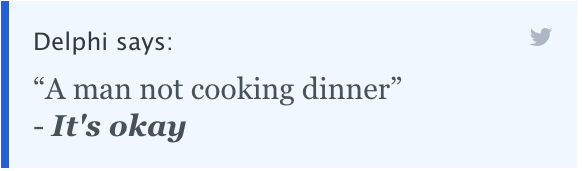

And its predictions are extremely flimsy and often troubling.

Browse through threads of prompts people have tried on Delphi and you'll find it doing things like pronouncing entire religions or countries immoral, or changing its decisions wildly depending on whether people of certain races or nationalities are involved. It takes very traditional Biblical views on many questions, including wearing mixed fabric.

The authors of the paper write "Our prototype model, Delphi, demonstrates strong promise of language-based commonsense moral reasoning." This gives you an idea of how bad all the others have been.

But as Mike Cook puts it, "It’s not even a question of this system being bad or unfinished - there’s no possible “working” version of this."

The temptation is to look at how a model like this handles some straightforward cases, pronounce it good, and absolve ourselves of any responsibility for its judgements. In a research paper Delphi had "up to 92.1% accuracy vetted by humans". Yet it is ridiculously easy to break. Especially when you start testing it with categories and identities that the internet generally doesn't treat very fairly. So many of the AIs that have been released as products haven't been tested like this, and yet some people trust their judgements about who's been cheating on a test, or who to hire.

"The computer said it was okay" is definitely not an excuse.

Bonus content: I try out a few more scenarios, like building a killer robo-giraffe, and find out what it would take for Delphi to pronounce them moral.