The drawings of DALL-E

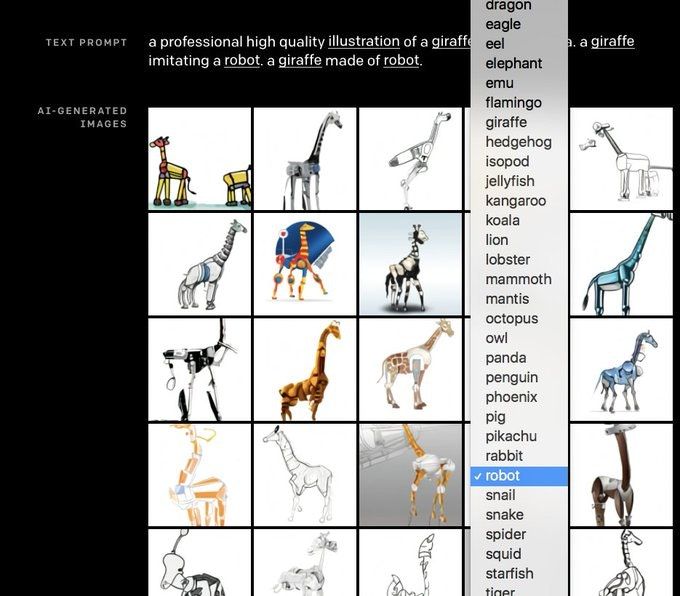

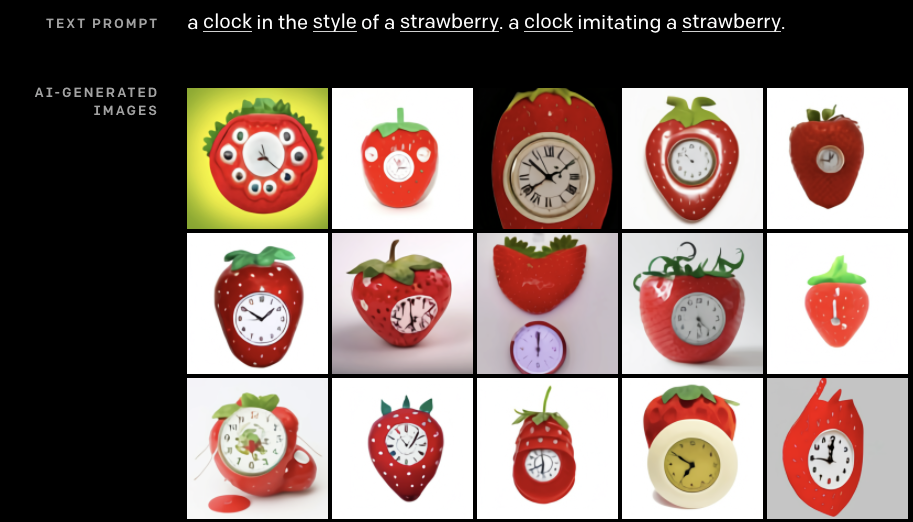

What type of giraffe would you like today?

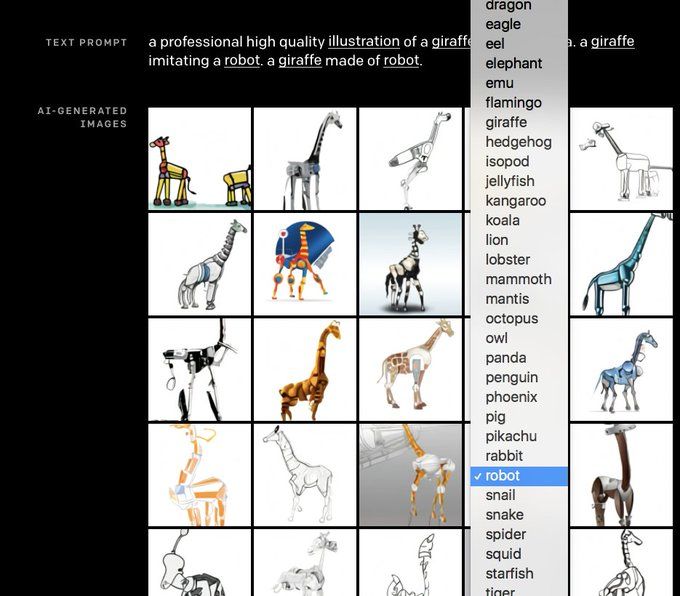

Last week OpenAI published a blog post previewing DALL-E, a new neural network that they trained to generate pictures from text descriptions. I’ve written about past algorithms that have tried to make drawings to order, but struggled to produce recognizable humans and giraffes, or struggled to limit themselves to just one clock:

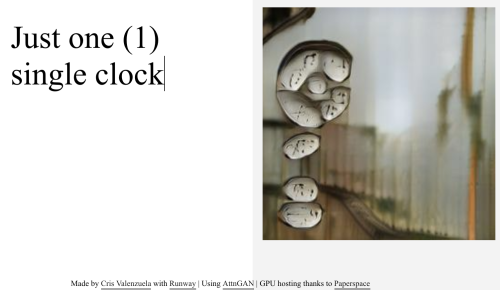

DALL-E not only can do just one clock, but can apparently customize the color and shape, or even make it look like other things. (I am secretly pleased to see that its clocks still look very haunted in general.)

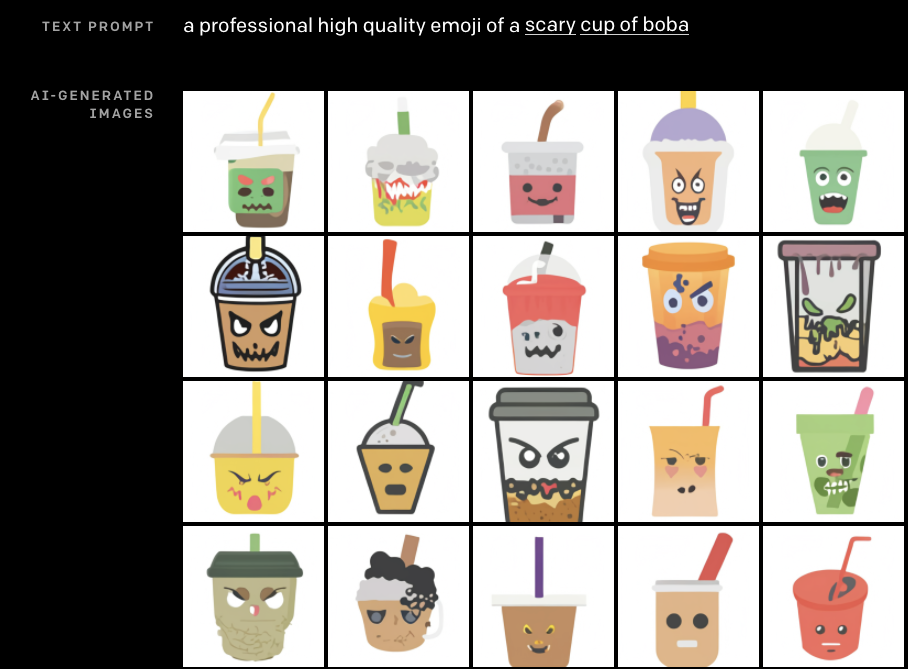

There aren’t a lot of details yet on how DALL-E works, or what exactly it was trained on, but the blog post shows how it responded to hundreds of example prompts (it shows the best 32 of 512 pictures, as ranked by CLIP, another algorithm they just released). Someone designed some really fun prompts.
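For the curious, the "best 32 of 512" step is easy to picture as code. This is only a toy sketch of generic top-k reranking, not OpenAI's actual pipeline: the function `rerank_top_k` and the scorer `toy_score` are names I made up for illustration, and `toy_score` is a stand-in for whatever match score CLIP assigns to an image and its caption.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def rerank_top_k(candidates: Sequence[T], score: Callable[[T], float], k: int) -> List[T]:
    """Return the k highest-scoring candidates, best first."""
    return sorted(candidates, key=score, reverse=True)[:k]


def toy_score(image_id: int) -> float:
    # Hypothetical placeholder: a real setup would ask a model like CLIP
    # how well each generated image matches the text prompt.
    return float((image_id * 37) % 512)


# Stand-in for 512 generated images, identified here by plain integers.
candidates = list(range(512))

# Keep only the 32 that the scorer likes best.
best = rerank_top_k(candidates, toy_score, k=32)
print(len(best))
```

The upshot is that what we see in the blog post is the top slice of a much larger pile, so the other 480 pictures per prompt may well be stranger.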

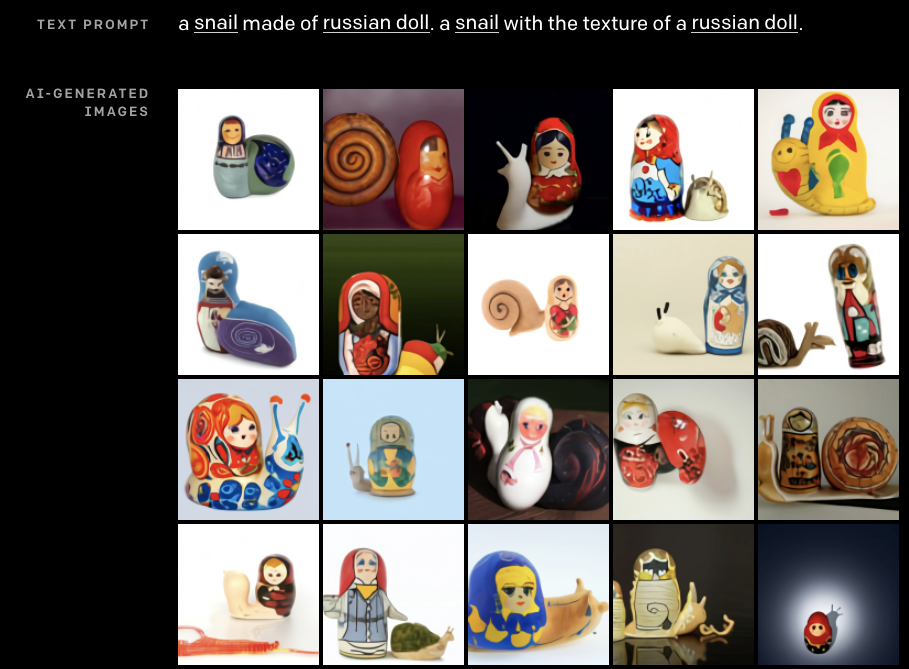

Since my investigations are restricted to a bunch of pre-generated prompts, I highly appreciate that many of the prompts are very weird.

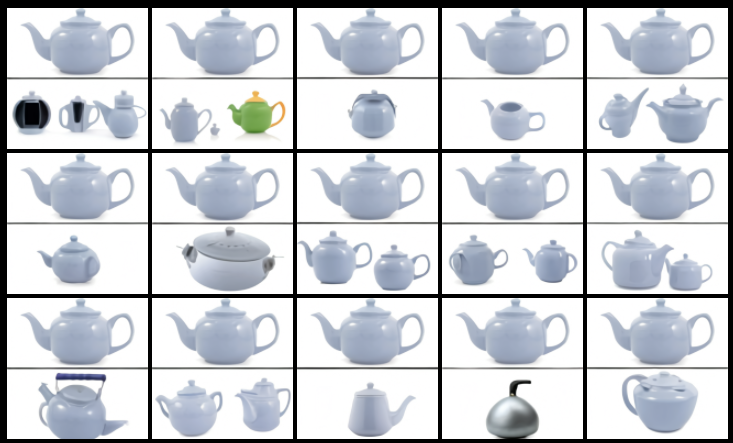

And the team also shows some examples of the neural network failing.

Here it's trying to do "the exact same teapot intact on the top broken on the bottom," where it was given a picture of a red teapot and asked to fill in the bottom half of the image as a broken version. There seems to be some confusion between "broken" and "cursed".

It also has a problem with “in tiny size”.

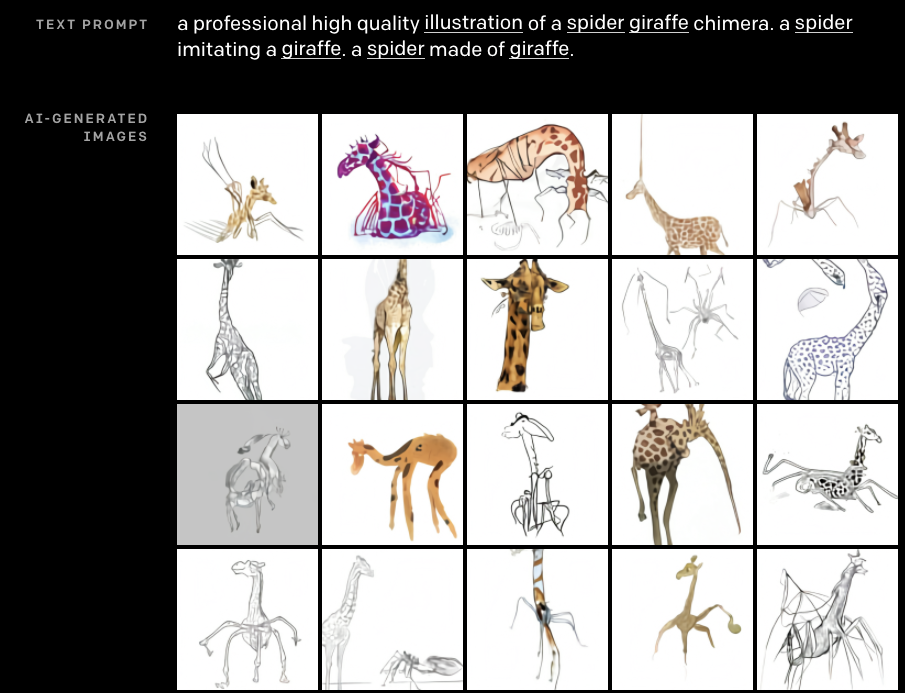

Its animal chimeras are also sometimes interesting failures. I’m not sure how I would have drawn a giraffe spider chimera, but it wouldn’t have been any of those, yet who is to say it is wrong, exactly?

We don’t know much yet about DALL-E’s training data. We do know it was collected not from pictures that people deliberately labeled (like the training data for algorithms like BigGAN) but from the context in which people used images online. In that way it’s kind of like GPT-2 and GPT-3, which draw connections between the way people use different bits of text online. In fact, the way it builds images is surprisingly similar to the way GPT-3 builds text.

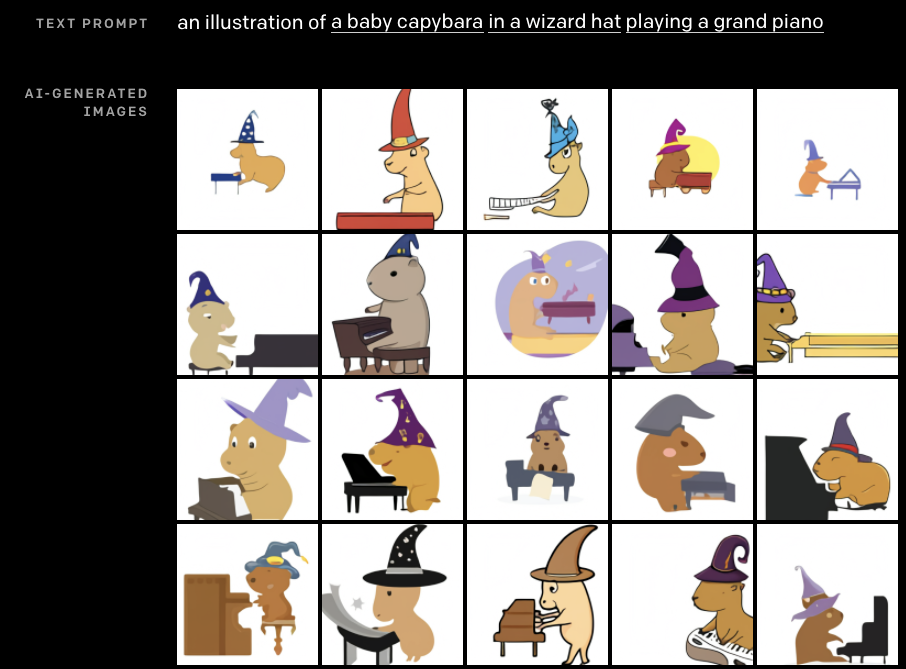

What these experiments show is that DALL-E is creating new images, not copying images it saw in its online training data. If you search for “capybara in a wizard hat playing a grand piano”, you don’t (at least prior to this blog post) find anything like this that it could have copied.

DALL-E does seem to find it easier to make images that are like what it saw online. Ask it to draw “a loft bedroom with a bed next to a nightstand. there is a cat standing beside the bed” and the cat will often end up ON the bed instead because cats.

I’m also rather intrigued by how the experimenters reported they got better results if they repeated themselves in the captions, or if they specified that the images should be professional quality - apparently DALL-E knows how to produce terrible drawings too, and doesn’t know you want the good ones unless you tell it.

One thing these experiments DON’T show is the less-fun side of what DALL-E can probably do. There’s a good reason why all of the pre-filled prompts are of animals and food and household items. Depending on how they filtered its training data (if at all), there’s an excellent chance DALL-E is able to draw very violent pictures, or helpfully supply illustrations to go with racist image descriptions. Ask it to generate “doctor” or “manager” and there’s a good chance its drawings will show racial and/or gender bias. Train a neural net on huge unfiltered chunks of the internet, and some pretty awful stuff will come along for the ride. The DALL-E blog post doesn’t discuss ethical issues and bias other than to say they’ll look into it in the future - there is undoubtedly a lot to unpack.

So if DALL-E is eventually released, it might only be to certain users under strict terms of service. (For the record, I would dearly love to try some experiments with DALL-E.) In the meantime, there’s a lot of fun to be had with the examples in the blog post.
