Trained a neural net on my cat and regret everything

Recently I had my first-ever experiment with training a neural net to generate images, when I trained StyleGAN2 to generate screenshots from The Great British Bakeoff. Although recognizable as the baking show, it was a highly distorted, nightmarish version - it would have helped had the neural net been able to keep track of how many faces humans have.

The problem was that the task was too hard. StyleGAN2 has good success when it only has to do human faces from the front, but when its task is broader, like generating internet cats, it struggles hilariously. So I decided to give it a more restricted cat dataset: just one cat. My cat. In more or less the same pose.

After a couple of data-collection attempts that were sabotaged by Char’s tendency to put her nose in the camera, I managed to get a full two minutes of video of her sitting still, staring intently at something invisible in the corner of the kitchen. Probably ghosts. It doesn’t matter. The point is, I got the video.

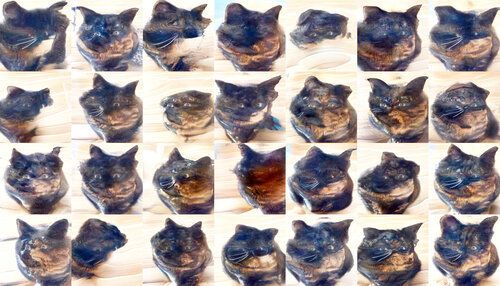

I extracted 3,934 frames from the video and used RunwayML to train StyleGAN2 on them for 3,000 iterations. Training took about 5 hours, and by the end, it was producing this:

Now, they aren’t perfect, but they are much, much better than the Bakeoff images. The cat spent the most time looking off to the right, so the neural net learned that pose the best.

But my word, to GET to this point the model had to travel through terrible places. You see, I started with a version of StyleGAN2 that had been pretrained on human faces, which saved a lot of time since the AI already knew how to do edges and textures and hair. But it meant that the process of switching gears from generating humans to generating cats yielded a stage that looked like… this.

This is one of the most horrible things I have ever seen, and I saw CATS (2019) in the theater.

Here’s a video of the full training process.

What is particularly alarming, perhaps, is the PATH it took from human to cat. Rather than transforming human eyes, nose, and mouth into the feline equivalents, it erased all the human features and then formed the cat features out of featureless fur. Here’s a snapshot of the training process in which you might be able to see how the cat/people have four eyeballs, or no eyeballs. (I adjusted the contrast to make these more visible, as these steps got really dark for some reason, as if to shield human eyes from what was happening.)

What is great/horrifying about this is that if you have a computer, an internet connection, and a two-minute video of something, you can do this too. Got a cat? A bunch of Easter eggs? A lemon? It’s now seriously easy to generate your own horrible abominations. The simpler and more consistent your subject, the more recognizable your results will be.
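If you want to try the first step yourself, turning a video into a folder of training frames, here is a minimal sketch of one way to do it. It assumes you have the ffmpeg command-line tool installed, and it is not necessarily how I extracted my own frames. The function names, the `frame_%05d.png` naming pattern, and the example file names are all made up for illustration.

```python
import shutil
import subprocess
from pathlib import Path


def build_ffmpeg_command(video_path, out_dir, fps=None):
    """Return an ffmpeg argument list that writes one PNG per frame.

    Hypothetical helper for illustration, not part of any library.
    """
    cmd = ["ffmpeg", "-i", str(video_path)]
    if fps is not None:
        # Optional: resample to a fixed number of frames per second.
        cmd += ["-vf", f"fps={fps}"]
    # %05d is ffmpeg's numbered-output pattern:
    # frame_00001.png, frame_00002.png, and so on.
    cmd.append(str(Path(out_dir) / "frame_%05d.png"))
    return cmd


def extract_frames(video_path, out_dir, fps=None):
    """Run ffmpeg and return how many frame files ended up in out_dir."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on your PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_ffmpeg_command(video_path, out_dir, fps), check=True)
    return len(list(Path(out_dir).glob("frame_*.png")))
```

Calling `extract_frames("cat.mp4", "frames")` (with your own file names) should leave you with a folder of numbered PNGs ready to upload as a training set. A two-minute clip at roughly 30 frames per second gives a few thousand images, which is in the same range as the 3,934 I used.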

