Nonexistent Easter Eggs

I’ve been experimenting with image-generating neural networks, which look at a bunch of images and via trial and error gradually learn to produce more like them. Or at least “like them” according to the neural net’s own interpretation of realistic, which is usually missing a lot.

Last time I used runwayml to finetune Nvidia’s StyleGAN2 neural net on Great British Bakeoff screenshots. This turned it from a neural net that produced fairly convincing human faces into one that produced horrible abstract versions of the baking show, with smears of bread and flesh and bunting everywhere. Its results were such a mess because it was trying to do so much - it couldn’t handle all the variety in the baking show.

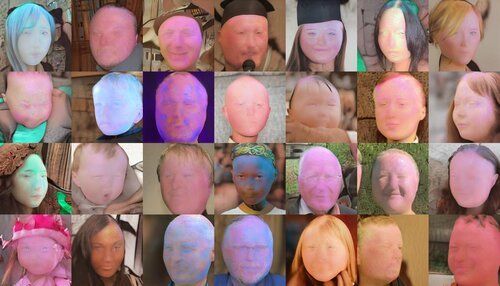

So this time I decided to train StyleGAN2 again, but with a much, much simpler set of images. I dyed 30 Easter eggs, set them on a wooden floor, and then panned over them with my phone’s camera. I extracted 1,928 frames from the resulting video, started training StyleGAN2, and after 3,000 iterations it was producing these:

These are at least recognizably eggs, even if they look like they have somehow caused the universe to warp around them and are just barely managing to linger within this dimension.
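For anyone wondering how a phone video turns into a pile of training images, here is a minimal sketch of one way to do it. It is not necessarily how I did it: it assumes the free command-line tool ffmpeg is installed, and the function names, the file name `eggs.mp4`, and the folder name `egg_frames` are all placeholders made up for illustration.

```python
import shutil
import subprocess
from pathlib import Path


def build_ffmpeg_command(video_path, out_dir, fps=None):
    """Build an ffmpeg command that writes video frames as numbered PNGs.

    fps=None leaves the frame rate to ffmpeg's defaults (roughly every frame);
    a number resamples the video to that many frames per second.
    """
    cmd = ["ffmpeg", "-i", str(video_path)]
    if fps is not None:
        # The "fps" video filter keeps only `fps` frames per second of video.
        cmd += ["-vf", f"fps={fps}"]
    # %05d makes ffmpeg number the files: frame_00001.png, frame_00002.png, ...
    cmd.append(str(Path(out_dir) / "frame_%05d.png"))
    return cmd


def extract_frames(video_path, out_dir, fps=None):
    """Run ffmpeg and return how many PNG frames ended up in out_dir."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_ffmpeg_command(video_path, out_dir, fps), check=True)
    return len(list(Path(out_dir).glob("frame_*.png")))
```

Calling `extract_frames("eggs.mp4", "egg_frames")` would fill the folder with frames and report how many it wrote. Passing something like `fps=10` thins them out, which can help if neighboring frames are nearly identical.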

It’s worth remembering that I didn’t give the neural net any instructions on what eggs were, or which colors and shapes were allowed. It just had to figure out why the faces it was generating at first were unacceptable, and what should change about them to make them more egglike. Unsurprisingly, the intermediate stages were somewhat startling.

It’s interesting to see what the neural net failed at. I had included several speckled eggs in the training data, yet none of the eggs turned out speckled. It did attempt a couple of the striped eggs, but they came out with weird color gradients that I have no idea how to achieve in real life. If you look closely, you can see that some are strongly textured to the point of being oddly furry. Based on their underlying math, image-generating neural nets tend to be better at small-scale texture than at large-scale structure.

Some of the neural net’s eggs were nothing like the eggs I had dyed. Pleasingly pearlescent, they had multiple layers of colors showing through, and an often plasterlike texture. There’s an apparent depth to them, as if they were cloud layers on gas giants. Or maybe the blush on a fruit. These are mistakes, but strangely appealing ones. I wonder what kind of animal would hatch from these.

It is seriously easy to try this yourself - you don’t need a fancy computer, or any coding skills. Got several hundred pictures of something? Use runwayml.com to generate your own monstrosities.

AI Weirdness supporters get bonus content: more amazing eggs! Or become a free subscriber to get AI Weirdness posts in your inbox.

My book on AI is out, and you can now get it in any of these several ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore (has signed copies!)