This is not cozy: AI attempts the Great British Bakeoff

I’m a big fan of comforting TV, and one of my go-tos is the Great British Bakeoff. It’s the cheerful clarinet-filled soundtrack, the low-stakes baking-centric tension, and the general good-natured kindness of the bakers to one another.

What better way to spread cheer and baked deliciousness than to train an algorithm to generate more images in the style of a beloved baking show?

I trained a neural net on 55,000 GBBO screenshots and the results, it turns out, were less than comforting.

What went terribly, terribly wrong?

This project was doomed from the beginning, despite using a state-of-the-art image-generating neural net called StyleGAN2. NVIDIA researchers trained StyleGAN2 on 70,000 images of human faces, and StyleGAN2 is very good at human faces - but only when that’s ALL it has to do. As we will see, when it had to do faces AND bodies AND tents AND cakes AND hands AND random squirrels, it struggled, um, noticeably.

Here are some of StyleGAN2’s human faces. They’re not all 100% convincing (and it’s best not to look at the ones at the edges of the images), but not a terrible starting point for a baking show algorithm that’s going to be doing lots of human faces. From here, I used RunwayML’s impressively easy-to-use interface to finetune StyleGAN2 on GBBO screenshots.

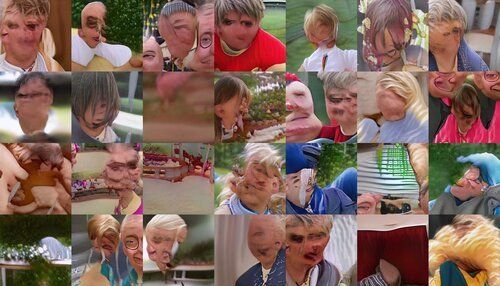

Did it take this knowledge of human faces and apply it to generating baking show humans? No, it did not. Almost the first thing it did was ERASE ALL THE FACES.

Many more iterations later, it had begun to generate humans again, but was nowhere near the performance it once had. I tried training it for longer, but progress slowed to a halt. This is the usual outcome when you train a neural network for a long time - not an acceleration of progress but a gradual stagnation. If your training dataset is too small, the neural net will memorize your training data, failing to produce anything new. Or with larger datasets, it may even become unstable, its outputs looking more and more garish and abstract, or turning into samey white glue. See that stripey scene near the lower right? High-contrast stripes like that might be a sign of that kind of trouble, a condition we call mode collapse. If I kept training for longer, there’s a chance that more and more of these images might end up stripey like that.
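If you want a rough number for "samey", one simple heuristic is to measure how different a batch of generated images are from one another. The sketch below is my own toy illustration, not something StyleGAN2 or RunwayML does for you: `mean_pairwise_distance` is a hypothetical helper, and the "images" are made-up four-pixel lists rather than real GBBO output. A batch whose score keeps shrinking as training goes on might be sliding toward that samey-glue state.

```python
from itertools import combinations
from math import sqrt


def mean_pairwise_distance(images):
    """Average Euclidean distance between every pair of images.

    Each image is a flat list of pixel brightness values, and all
    images are assumed to have the same length.
    """
    pairs = list(combinations(images, 2))
    if not pairs:
        raise ValueError("need at least two images to compare")
    total = 0.0
    for a, b in pairs:
        total += sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return total / len(pairs)


# Toy 4-pixel "images" (invented numbers, purely for illustration).
varied_batch = [[0, 0, 0, 0], [255, 255, 255, 255], [0, 255, 0, 255]]
samey_batch = [[200, 200, 200, 200], [201, 200, 199, 200], [200, 201, 200, 199]]

print("varied batch:", mean_pairwise_distance(varied_batch))
print("samey batch: ", mean_pairwise_distance(samey_batch))
```

The samey batch scores far lower than the varied one. Raw pixel distance is a crude stand-in for what a human would call "variety", so treat this as a sanity check rather than a diagnosis.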

So the baking show images were too varied for the neural net, and that’s why its progress stopped, even with lots of training data. But why did the neural net fail to use its prior expert knowledge of human faces? It may be that its ability to do faces is very dependent on where the face is. If you go back and look at that original set of nicely centered faces that StyleGAN2 made, you’ll notice that when it tries to do faces at image edges, they look a bit of a mess. “Humans with their faces uniformly centered” is mostly within the grasp of today’s state-of-the-art neural nets; “Humans shifted around a bit” is a smidge too difficult.

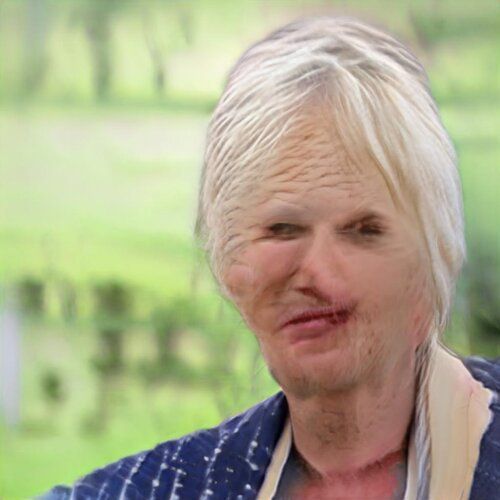

What is the neural net good at? It’s best at patterns. In the rather distressing image below, note how much more effectively the neural net managed distant trees and support columns and even Union Jack bunting - all repeating patterns. Even where the neural net ill-advisedly decides to fill the entire tent interior with bread (or possibly with fingers; it’s sometimes unsettlingly hard to tell), you can see that the patterns in the bread repeat.

Human faces and bodies, on the other hand, aren’t made of repeating patterns, no matter how much the neural net may want to make them that way.

In fact, excessive repetition is one of the hallmarks of neural net-generated images. Even when the repetitiveness is more subtle, it still tends to be there, and it’s one of the ways you can detect AI-generated images. At its most basic, a neural net usually builds images by stacking lots of repeating features on top of one another, finetuning the balance between them to produce objects and textures. If it gets the balance slightly wrong, individual repeating features tend to pop out. Once you start looking for the repetition, you’ll see it everywhere.
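If you'd rather have a computer look for the repetition, one classic trick is autocorrelation: slide a row of pixels along itself and see how well it matches. Here's a minimal sketch under my own assumptions. It works on a single row of brightness values rather than a whole image, the function names `autocorrelation` and `strongest_period` are made up for this example, and it is not how any real AI-image detector is confirmed to work.

```python
def autocorrelation(values, lag):
    """Normalized autocorrelation of a row of pixel values at one lag.

    Close to 1.0 means the row looks much the same when shifted by
    `lag` pixels; close to 0 means no relationship; negative means
    the shifted row tends to be the opposite of the original.
    """
    n = len(values)
    if not 0 < lag < n:
        raise ValueError("lag must be between 1 and len(values) - 1")
    mean = sum(values) / n
    centered = [v - mean for v in values]
    variance = sum(c * c for c in centered)
    if variance == 0:
        return 0.0  # a perfectly flat row has no pattern to measure
    overlap = sum(centered[i] * centered[i + lag] for i in range(n - lag))
    return overlap / variance


def strongest_period(values):
    """Return the lag (up to half the row length) with the best self-match."""
    lags = range(1, len(values) // 2 + 1)
    return max(lags, key=lambda lag: autocorrelation(values, lag))


# A made-up stripey row: two dark pixels, two bright pixels, repeated.
stripes = [0, 0, 255, 255] * 8

print("self-match at lag 4:", autocorrelation(stripes, 4))
print("self-match at lag 2:", autocorrelation(stripes, 2))
print("strongest period:", strongest_period(stripes))
```

For the stripey row, the best match comes at a shift of 4 pixels, which is exactly the width of one dark-plus-bright stripe pair. A real photo would give much murkier numbers, so a strong, clean peak is a hint of repetition, not proof of a neural net.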

Given that it’s supposed to be doing a baking show, does the neural net produce actual baked goods? The answer is yes. I trained the neural net a few times, trying different dataset sizes and different methods of cropping the training data, and each time it would latch onto a different texture that it seemed to use as a placeholder for “human food”. Each one repetitive, of course. Would you like voidcake, floating dough, or terror blueberry?

It is seriously easy to try this yourself - you don’t need a fancy computer, or any coding skills. Got a camera and several hundred pictures of your cat? Use runwayml.com to generate your own monstrosities.
