A.I.nktober: A neural net creates drawing prompts

There’s a game called Inktober where people post one drawing for every day in October. To help inspire people, the people behind Inktober post an official list of daily prompts, a word or phrase like Thunder, Fierce, Tired, or Friend. There’s no requirement to use the official lists, though, so people make their own. The other day, blog reader Kail Antonio posed the following question to me:

What would a neural network’s Inktober prompts be like?

Training a neural net on Inktober prompts is tricky, since there have only been 4 years’ worth of prompts so far. A text-generating neural net’s job is to predict what text comes next in its training data, and if it can memorize its entire training dataset, that’s technically a perfect solution to the problem. Sure enough, when I trained the neural net GPT-2 345M on the existing examples, it memorized them in just a few seconds. In fact, it was rather like melting an M&M with a flamethrower.

My strategy for getting around this was to increase the sampling temperature, which means that I forced the neural net to go not with its best prediction (which would just be something plagiarized from the existing list), but with something it thought was a bit less likely.
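Here is a rough sketch of what a sampling temperature does, in plain Python. It is not GPT-2's actual code: the function names and the three toy scores are made up for illustration, and a real model would have scores for tens of thousands of tokens. The idea is that the model's raw scores get divided by the temperature before being turned into probabilities, so a higher temperature flattens the distribution and gives less likely options more of a chance.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_index(logits, temperature=1.0, rng=random):
    """Pick one index at random, weighted by the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# Hypothetical scores for three candidate next tokens (made-up numbers).
toy_logits = [4.0, 2.0, 1.0]
for t in (0.5, 1.0, 1.4):
    print(t, [round(p, 3) for p in softmax_with_temperature(toy_logits, t)])
```

As the temperature goes up, the top choice's share of the probability shrinks and the runners-up grow, which is what nudges the output away from the single most likely (memorized) continuation.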

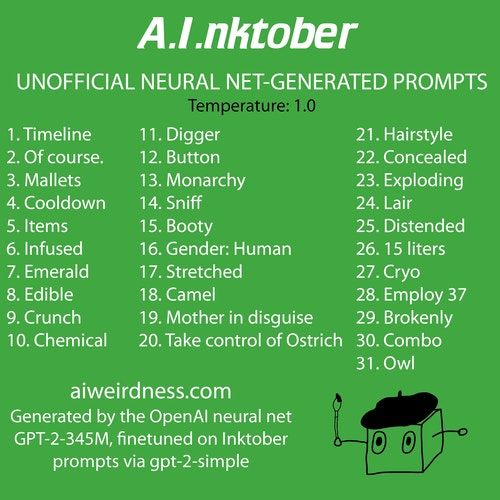

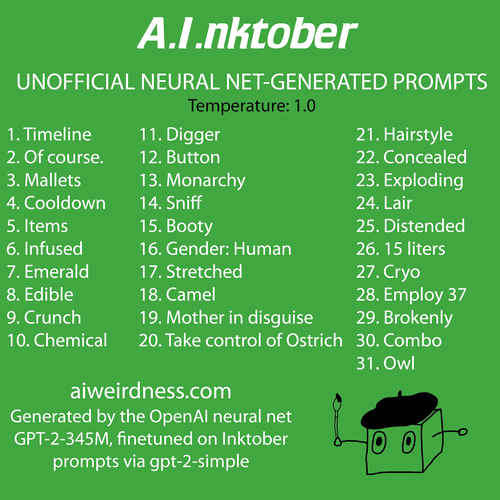

Temperature 1.0

At a temperature setting of 1.0 (already relatively high), the algorithm tends to cycle through the same few copied words from the dataset, or else it fills the screen with dots, or with repeated words like “dig”. Occasionally it generates what look like tables of D&D stats, or a political article with lots of extra line breaks. Once it generated a sequence of other prompts, as if it had somehow made the connection to the overall concept of prompts.

The theme is: horror.

Please submit a Horror graphic

This can either be either a hit or a miss monster.

Please spread horror where it counts.

Let the horror begin…

Please write a well described monster.

Please submit a monster with unique or special qualities.

Please submit a tall or thin punctuated or soft monster.

Please stay the same height or look like a tall or thin Flying monster.

Please submit a lynx she runs

This is strange behavior, but training a huge neural net on a tiny dataset apparently does weird stuff to its performance.

Where did these new words come from? GPT-2 is pretrained on a huge amount of text from the internet, so it’s drawing on words and letter combinations that are still somewhere in its neural connections, and which seem to match the Inktober prompts.

In this manner I eventually collected a list of newly-generated prompts, but it took a LONG time to sample these because I kept having to check which were copies and which were the neural net’s additions.
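That kind of copy-checking can be partly automated. The snippet below is a minimal sketch, not the process actually used for this post: the helper names are invented, the training list contains only the four official prompts quoted earlier, and the sampled lines are hypothetical stand-ins for neural net output. It ignores capitalization and stray whitespace when comparing, and it also drops repeats within the generated text.

```python
def normalize(prompt):
    """Lowercase and collapse whitespace so 'Thunder ' matches 'thunder'."""
    return " ".join(prompt.lower().split())

def new_prompts(generated, training):
    """Return generated prompts that are not in the training list, without duplicates."""
    seen = {normalize(p) for p in training}
    fresh = []
    for line in generated:
        key = normalize(line)
        if key and key not in seen:  # skip blank lines, copies, and repeats
            seen.add(key)
            fresh.append(line.strip())
    return fresh

# Official example prompts quoted earlier in this post.
training_prompts = ["Thunder", "Fierce", "Tired", "Friend"]
# Hypothetical sampled lines, for illustration only.
sampled_lines = ["thunder", "Weirdness", "Friend ", "", "Weirdness", "Thing"]

print(new_prompts(sampled_lines, training_prompts))
```

An exact-match check like this would not catch near-copies (a training prompt with one letter changed, say), so some reading by eye would still be needed.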

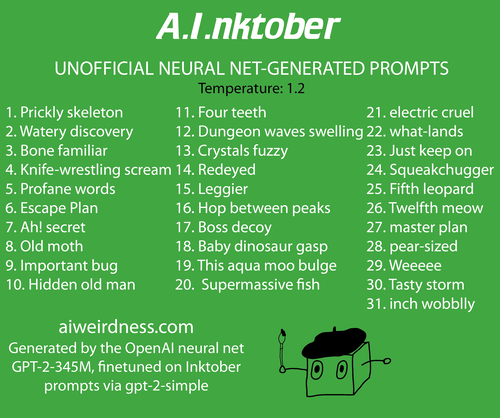

Temperature 1.2

So, I tried an even higher sampling temperature, to try to nudge the neural net farther away from copying its training data. One unintended effect of this was that the phrases it generated started becoming longer, as the high temperature setting made it veer away from the frequent line breaks it had seen in the training data.
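One way to see why line breaks would get rarer is with some toy arithmetic. Suppose (and these numbers are entirely made up) the net scores a line break 8 points higher than each of 1,000 equally unlikely alternatives. Dividing the scores by the temperature shrinks that lead, and the line break's probability drops quickly. The function name and the setup below are illustrative assumptions, not a measurement of what GPT-2 actually did.

```python
import math

def favorite_probability(gap, n_others, temperature):
    """Probability of one favored token whose score beats n_others equal rivals by `gap`."""
    return 1.0 / (1.0 + n_others * math.exp(-gap / temperature))

# Made-up numbers: a line break scoring 8 points above 1,000 equally unlikely alternatives.
for t in (1.0, 1.2, 1.4):
    p = favorite_probability(8.0, 1000, t)
    print(f"temperature {t}: P(line break) = {p:.2f}")
```

In this toy setup the chance of a line break falls from about three in four at temperature 1.0 to under one in four at 1.4, so lines would tend to run on much longer before they end.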

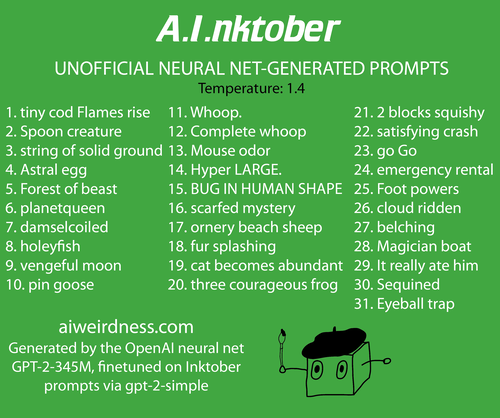

Temperature 1.4

At an even higher sampling temperature the neural net would tend to skip the line breaks altogether, churning out run-on chains of words rather than a list of prompts:

easily lowered very faint smeared pots anatomically modern proposed braided robe

dust fleeting caveless few flee furious blasts competing angrily throws unauthorized age forming

Light dwelling adventurous stubborn monster

It helped when I prompted it with the beginning of a list:

Computer

Weirdness

Thing

but still, I had to search through long stretches of AI garble for lines that weren’t ridiculously long.

So, now I know what you get when you give a ridiculously powerful neural net a ridiculously small training dataset. This is why I often rely on prompting a general purpose neural net rather than attempting to retrain one when I’ve got a dataset of fewer than a few thousand items - it’s tough to walk that line between memorization and glitchy irrelevance.

One of these days I’m hoping for a neural net that can participate in Inktober itself. AttnGAN doesn’t quiiite have the vocabulary range.

AI Weirdness supporters get bonus content: An extra list of 31 prompts sampled at temperature 1.4. Or become a free subscriber to get new AI Weirdness posts in your inbox!

And if you end up using these prompts for Inktober, please please let me know! I hereby give you permission to mix and match from the lists.

Update: My US and UK publishers are letting me give away some copies of my book to people who draw the AInktober prompts - tag your drawings with AInktober and every week I’ll choose a few people based on *handwaves* criteria to get an advance copy of my book. (US, UK, and Canada only, sorry)

In the meantime, you can order my book You Look Like a Thing and I Love You! It’s out November 5, 2019.

Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s