Lucid Deep Dreaming?

“Frodo Baggins delivering a pizza through the mines of Moria”

Remember my attempts to get CLIP+BigGAN to generate candy hearts? Here’s what an alternative method, CLIP+FFT, does with the prompt “a candy heart with a message”.

Rather than a single obsessively-scribbled-upon heart, we now have a vast universe of candy hearts, jostling against one another with their messages screaming incomprehensible love at the viewer.

As before, CLIP is the judge, telling another algorithm whether this collection of pixels looks more like “a candy heart with a message” than that collection of pixels. But this time, the algorithm presenting the images to CLIP isn’t steering through BigGAN, which was trained on a set of human photography. Instead, it’s doing something a lot more like the classic Deep Dream images, changing parts of the image to maximize how much it looks like dogs, or whatever the prompt is supposed to be.

(this cartoon is from my book You Look Like a Thing and I Love You: How AI Works and Why It’s Making the World a Weirder Place - out in paperback on March 23, 2021)

And since CLIP was trained on text and images that appeared together on the internet, it can be the judge of just about anything.

Here’s “A stegosaurus flying a spaceship among lasers”.

And it knows how to judge pop culture figures and even the look of TV shows. Here’s “Godzilla and Paul Hollywood in the Bakeoff tent”

Note that it not only correctly has the tent as white and pointy-roofed, it even is trying to do the Union Jack bunting. And it’s really sensitive to the prompt, so if you type “Godzilla and Paul Hollywood taking a selfie in the Bakeoff tent” instead, Paul Hollywood breaks out into a grin and cameras appear. (it seems to be less sure what a grinning Godzilla looks like)

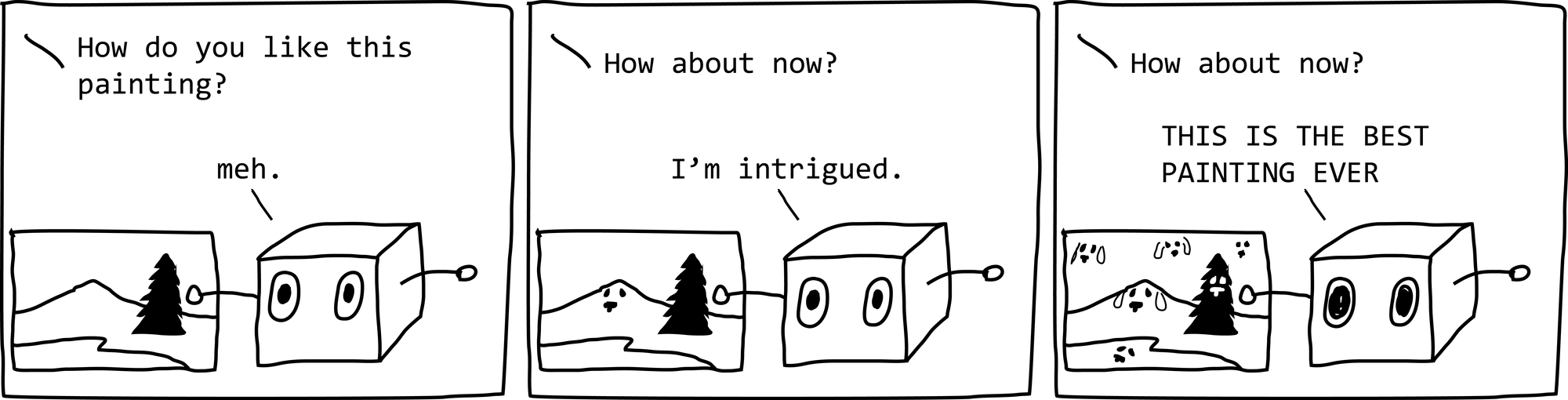

Here’s “Mr Darcy emerges from a lake in a white shirt while his horse looks on”

It does less well to my mind when there are fewer clues about what the background should look like. Tell it just to do “Tyrannosaurus Rex” and things get very abstract and smeary, and it even resorts to trying to write “tyrannosaurus” everywhere.

“A tyrannosaurus wearing a crinoline hoop skirt on a fashion show runway” looks a bit more realistic. Or maybe that’s just my preference. The trees in the background are a nice touch.

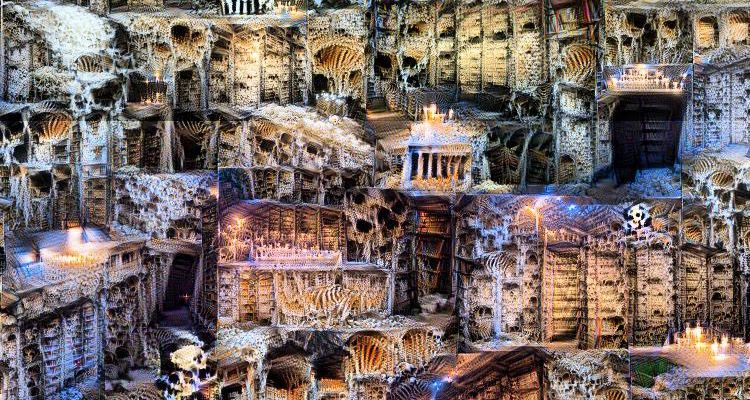

Here’s a zoomed-in view of one of the best ones: “a library made of bones and skeletons; a library in the style of catacombs”. It doesn’t seem to resort to word graffiti if the prompt suggests a finely textured background, maybe. (This may have been from a newer version of the CLIP+FFT notebook, so that could explain some of the improved quality.)

You do need a bit of imagination maybe to figure out what the original prompts were, so I wouldn’t exactly say that CLIP+FFT as successful as making images to order as the original CLIP+DALL-E (still not released publicly). But having a neural net that will attempt whatever I ask for (and not turn every human into a horror of many-eyed blobs) is still pretty fun.

“The daleks have filled the tardis with llamas and David Tennant is annoyed”

Read more about CLIP+FFT (built by Vadim Epstein) and try it yourself for free with the colab notebook!

I made a bonus gallery of various characters delivering pizza. Spider-man’s not the only one who’s recognizable with a fresh pie in hand. To see the gallery, and get other bonus content, become an AI Weirdness supporter! Or become a free subscriber to get new AI Weirdness posts in your inbox.