Generating images from an internet grab bag

Still amazed by this:

Here's CLIP+VQGAN (trained on internet photos and their accompanying text), prompted two different ways:

"A car driving down a desert road in monument valley"

"A car driving down a desert road in monument valley | dramatic atmospheric ultra high definition free desktop wallpaper"

The SAME algorithm could produce both images, but it thought the first one was the better answer until I changed my question.

That's a problem with training AIs on huge amounts of internet data: a lot of that data isn't going to be what you want, and the AI doesn't know the difference.

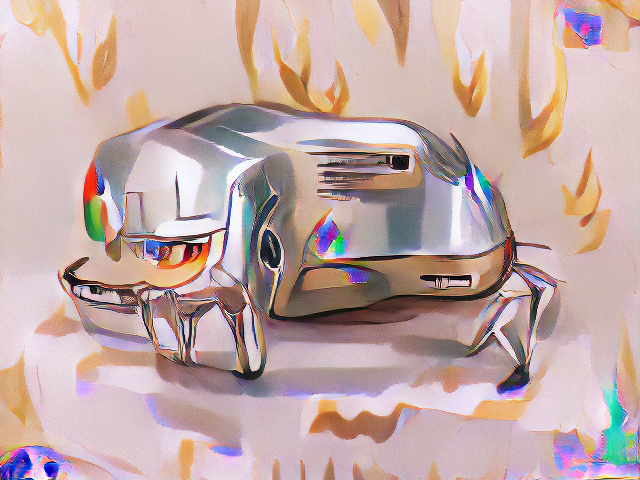

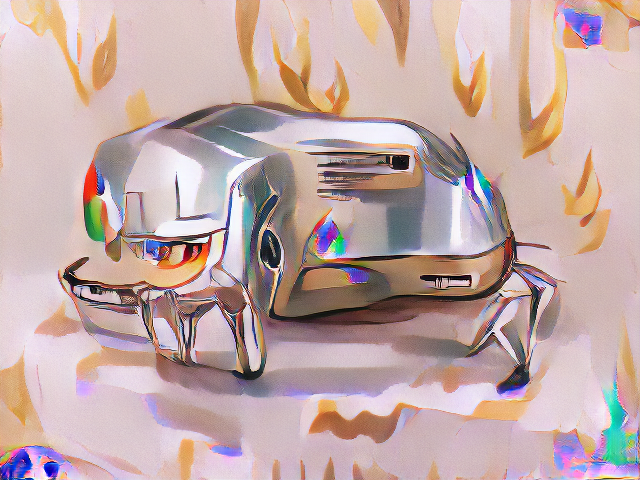

Here's "a toaster"

The toaster is partially made of toast, so I tried to get it to generate a toaster made of chrome instead. Turns out I can't seem to get it to do a chrome toaster without somehow incorporating the Google Chrome logo. General internet training seems to poison certain keywords.

We may never know all that's in internet training data, and yes, that should scare us a little.

I generated a whole bunch more toasters that I collected as bonus content. Become an AI Weirdness supporter to get it! Or become a free subscriber to get new AI Weirdness posts in your inbox.

You can try CLIP+VQGAN for free by following the instructions in this tutorial (no coding or Spanish language skills necessary).