Generating landscapes without people

I've been experimenting with GauGAN2, released in Nov 2021 as a follow-on to GauGAN. One new thing Nvidia added in GauGAN2 is the ability to generate a picture to match a phrase.

"A rocky stream in an ancient mossy rainforest"

It can do more than generic landscapes - it can also try to get the vibe of specific places. Here's "Rocky Mountain National Park"

It's tough to tell exactly what it knows because its scenes depend not only on the text you give it, but also on the style filter you select and even on the seed for the random noise. And you can't control the random noise seed - you can only reload the site and get a new one (which confused me at first when I would revisit a prompt/filter combo and find that my results had changed).

The secret to being impressed with its "the city of san francisco with the golden gate bridge" is to pick the filter/seed combo where it's showing the city, not the countryside scene or the icebergs.

(The above scenes were all generated with the same seed but different filters - notice how the topography is similar.)

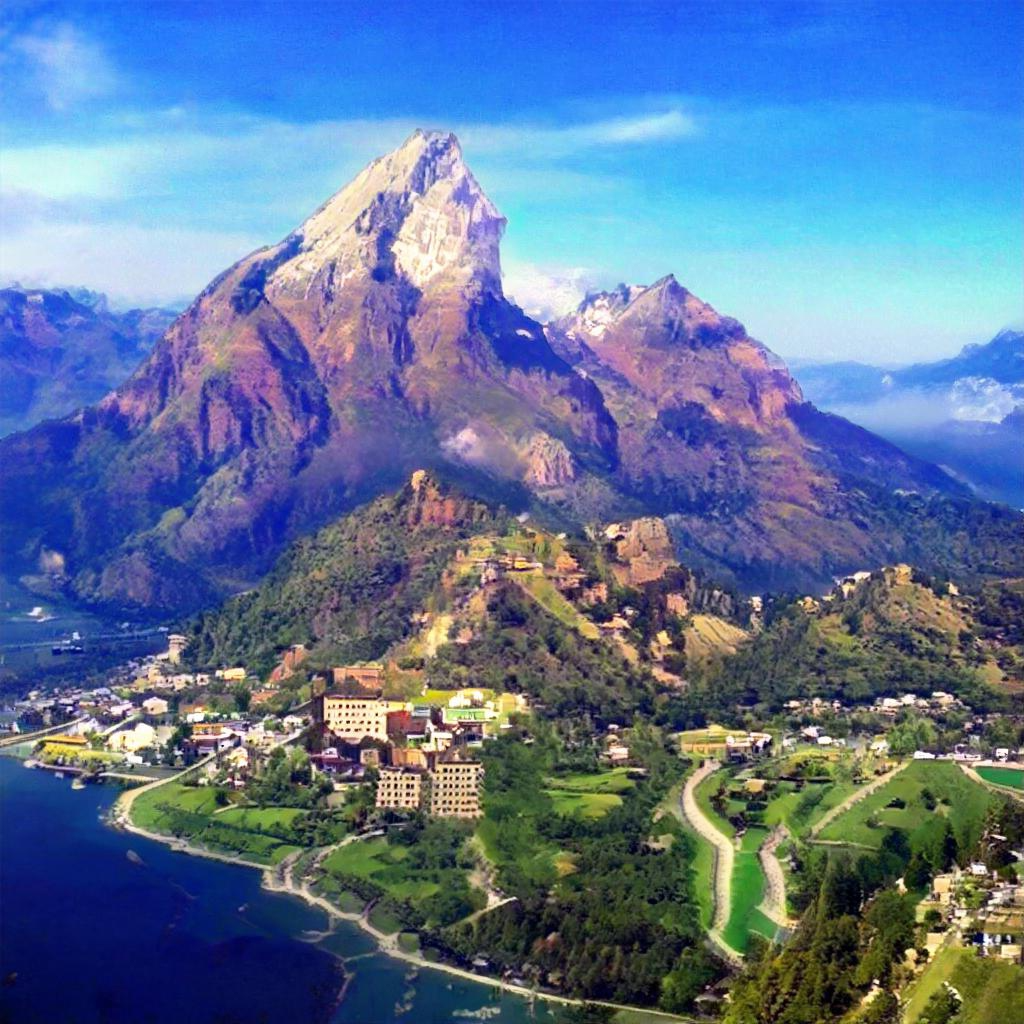

At some filter/seed combinations it almost doesn't matter what you type; you are predestined to get certain elements. Here I asked for "Furnace Creek" (a decidedly dry spot in Death Valley), and the main effect was that the mountains above the tropical bay became a little browner. The information about Furnace Creek was in there, but it seemed to be warring with information about what went along with the color scheme it was supposed to be using.

I decided to stick with a particular seed/filter combination and change the text prompt, to find out what it was getting from the text alone. Using the same seed and filter as the "Rocky Mountain National Park" picture, I discovered GauGAN2 also knows "The Alps" is steep mountains and "The Appalachians" are mountains that are much less steep and completely covered in trees.

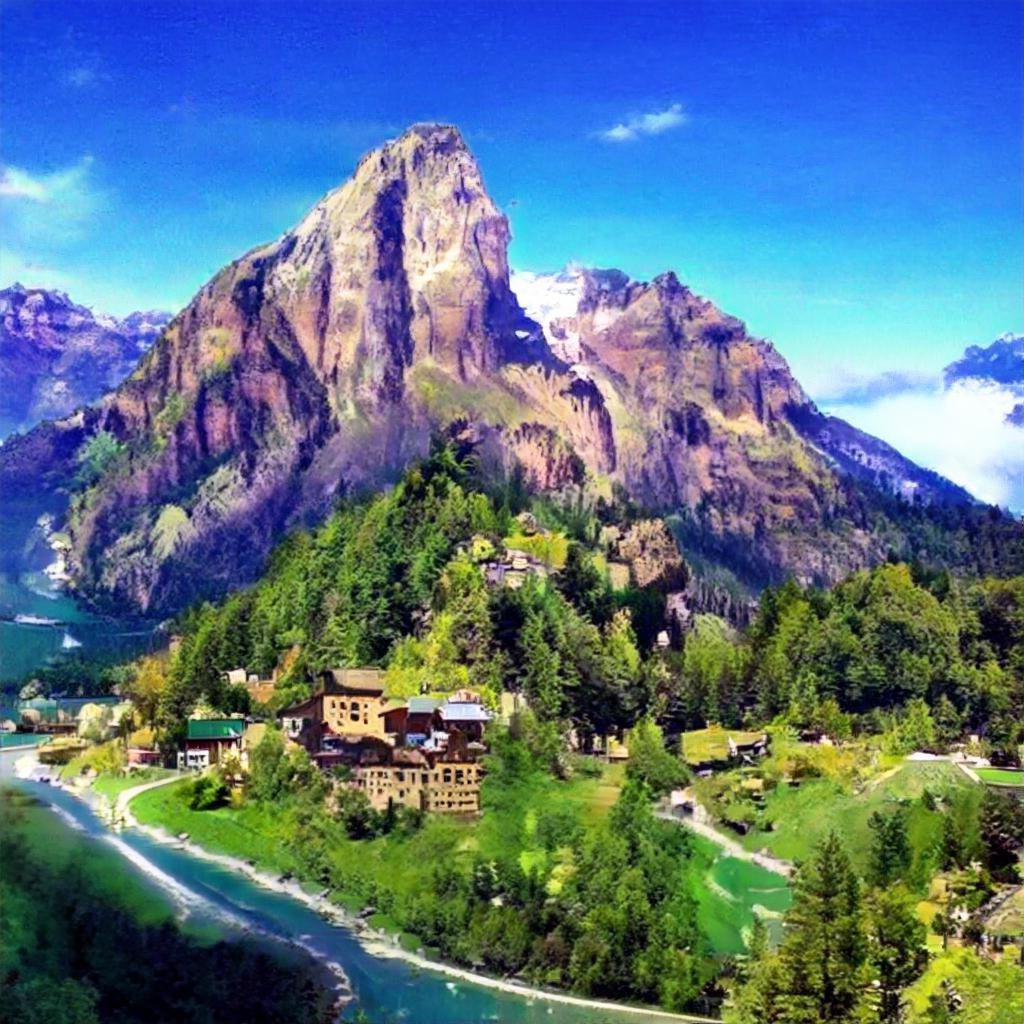

Here's a series of images with the same filter and seed, a different one from the picture above.

"Furnace Creek"

"Norway"

"Manhattan"

"The inside of a library"

"Three apples on a plate"

It seems that as the prompt becomes a worse match for the seed and filter settings, and strays away from landscapes in general, not only does the mountain scene remain looking like a mountain scene, it becomes a worse and blurrier one, as if GauGAN2 is out of options and flailing.

Why is GauGAN2 so flummoxed by three apples? Unlike popular algorithms based on CLIP, GauGAN2 was NOT trained on the internet but on a custom dataset of "high-quality landscape images". I couldn't find any details on what was/wasn't in that dataset, and who labeled the images and how. (Those details matter - one complaint about images generated using CLIP is that since it got its image/text data by scraping the internet, its training data was essentially labeled by random internet people, and was therefore full of bias. Similar things can happen if random internet people provide your training data on moral dilemmas.)

We do know the training data focused on landscapes, and Nvidia als0 told VentureBeat that they "audited to ensure no people were in the training images".

How completely did they erase humans from the training data? I decided to do some tests.

Here's "A busy sidewalk".

Similar prompts, like "a crowded sidewalk downtown" and "a crowded shopping mall" and "times square" all resulted in variations of the above tropical-themed algorithmic shrug.

Is it completely clueless about non-landscape things?

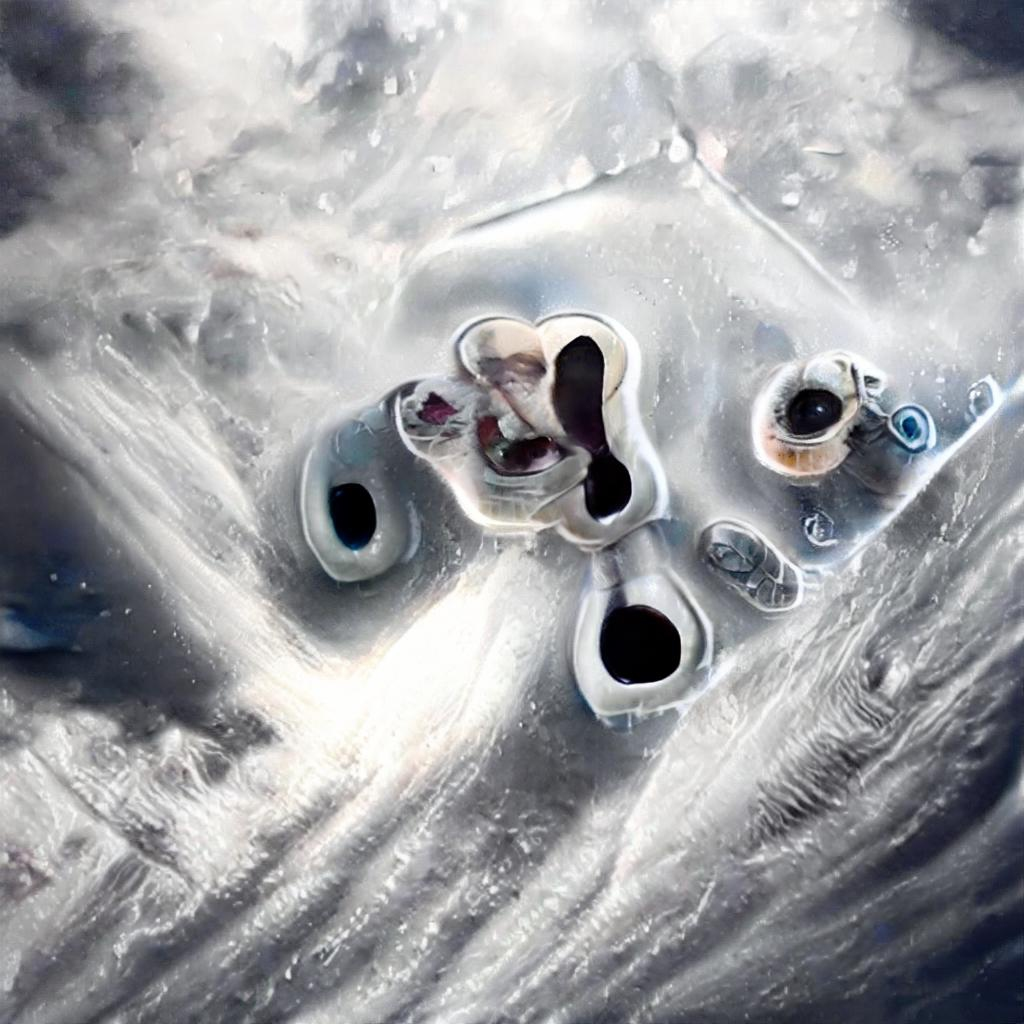

Here's "a giraffe"

And, under the same seed/style settings, "a turtle"

And "a gymnasium"

And "a sheep"

So it's made a sheep look more like a giraffe than like a gymnasium. In further tests, a raven looks more like a sheep, whereas a writing desk looks more like a gymnasium. Any kind of mammal generally ends up being rendered as furry and covered in far far too many eyes. There's information about animals and objects in there, either from rare images in the training data or from remnants of earlier, general, pretraining. What about a human?

"A human"

Hard to say. With some seed/filter combinations a human does look more like a somewhat less furry giraffe. The effect isn't consistent, though. I got similarly inconclusive results from variations on homo sapiens, basketball players, the Mona Lisa, a CEO, etc. To erase knowledge of humans this thoroughly, my guess is they trained from scratch on a dataset where people had gone through and manually deleted any picture that had a person in it. Humans are basically gone.

It's poignant to think that GauGAN2 was able to generate this rendition of Topeka, Kansas, filled with homes and boats and skyscrapers, yet unable to generate any of the people who inhabit it, or interiors of any of the buildings.

Bonus content for AI Weirdness supporters: GauGAN2 railroads me into asking for whatever it already had in mind.