AI is based on math so it is correct

Since OpenAI released CLIP, trained on internet pictures and their nearby text, people have been using it to generate images. In all these methods - CLIP+Dall-E, CLIP+BigGAN, CLIP+FFT, CLIP+VQGAN, CLIP+diffusion - you come up with a text prompt, some algorithm presents its images to CLIP, and CLIP's role is to judge how well the images match the prompt. With CLIP's judgements for feedback, the algorithm can self-adjust to make its images match the prompt.

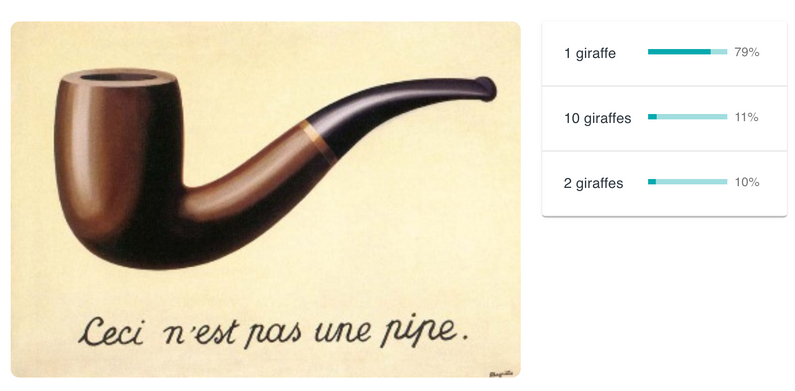

But we also do the reverse and set up an app where you give CLIP an image, and then CLIP judges how well text matches the image.

One such app is CLIP+backpropagation. The way it works is I upload an image, and then type a few example captions. Then CLIP rates each caption by how well it matches my image.

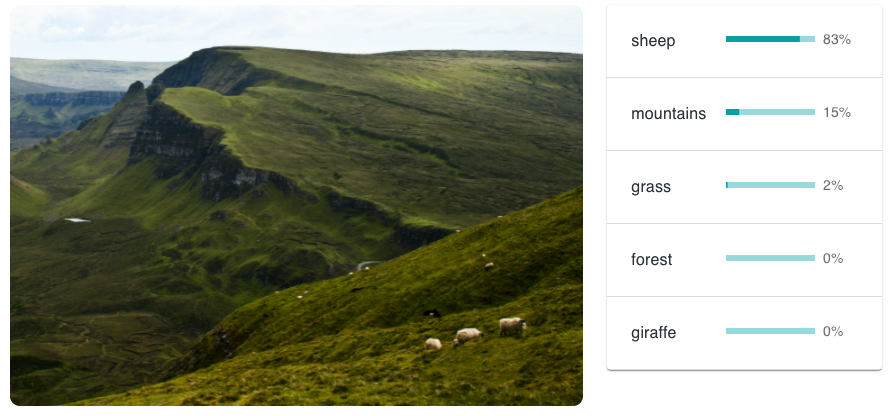

There's more grass than sheep in this picture, but CLIP picks up strongly on the sheep. This makes sense, since it's trained on the way people tend to describe photos online - most people wouldn't highlight the grass when describing this photo.

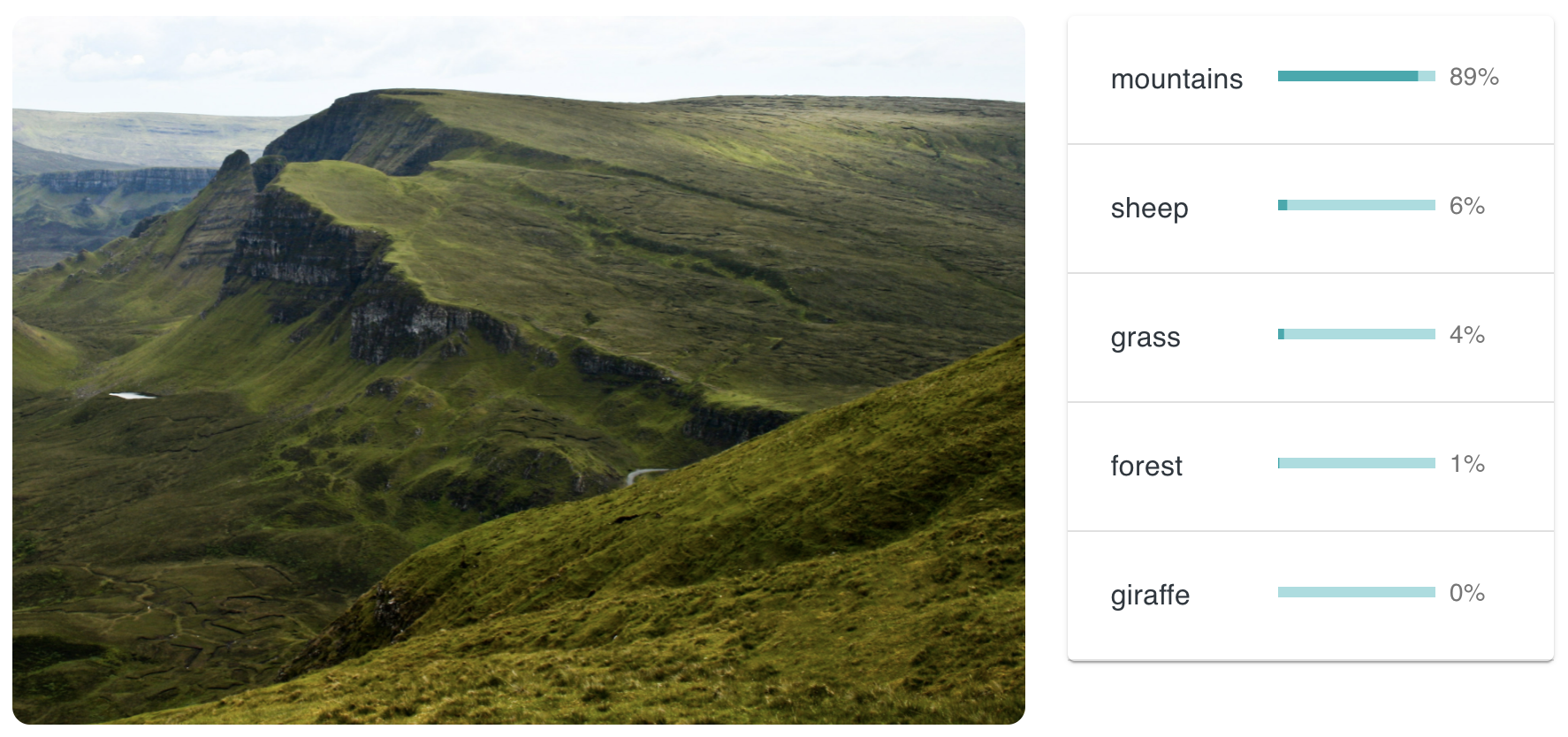

And when I erase all the sheep and try again, now it prioritizes the mountains. (Although it's hedging its bets with a small possibility of sheep.)

This may be progress versus an earlier image recognition algorithm that still reported sheep in the second image. I say may be, because we don't know how confident the AIs were about the sheep in either case. The earlier AI may have reported sheep even if it wasn't very confident about them. Whereas CLIP+backpropagation assumes that one of my potential captions is the correct answer, and so it makes all its probabilities add up to 100%.

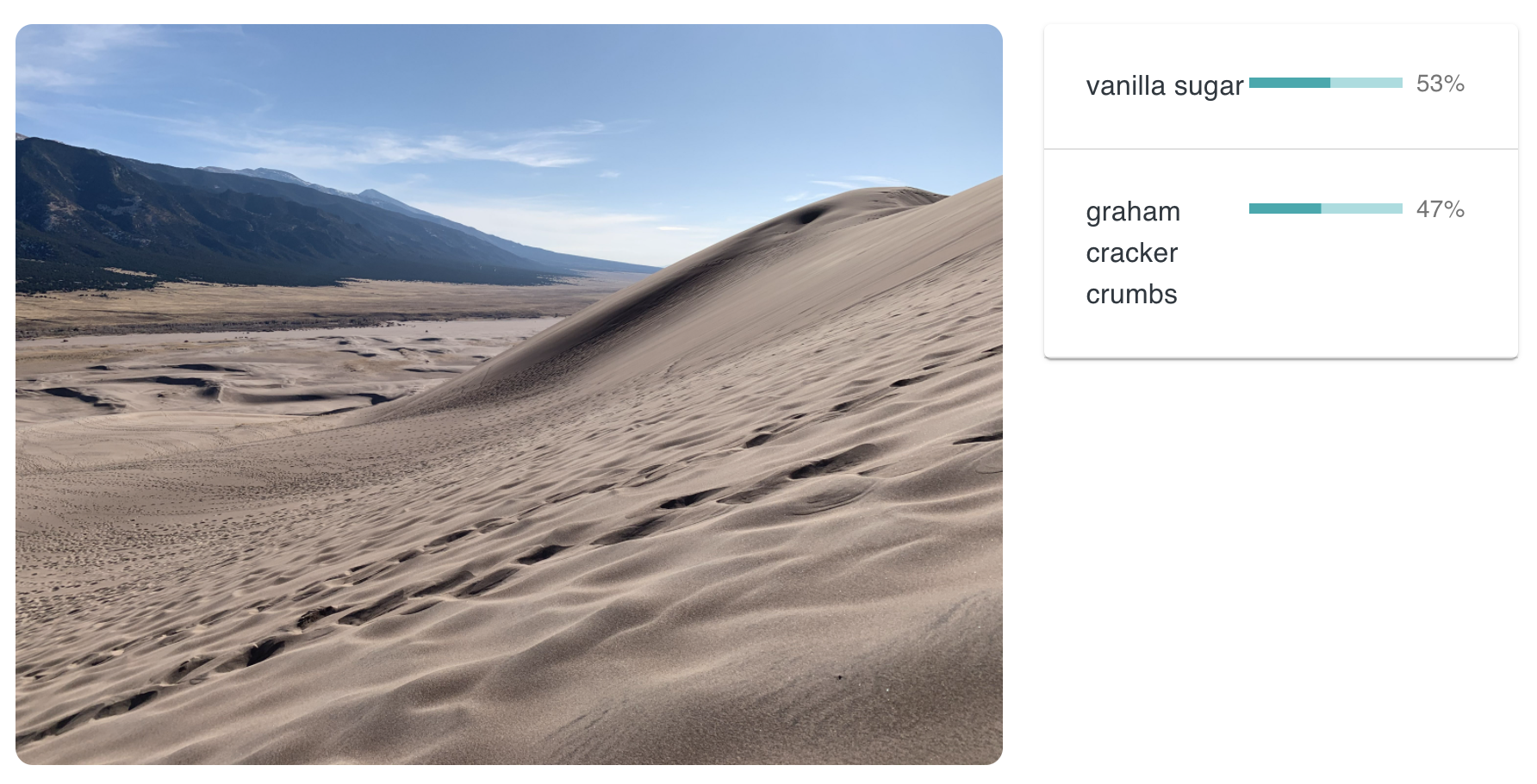

If I give it captions to choose from that aren't a good match, it still has to choose something.

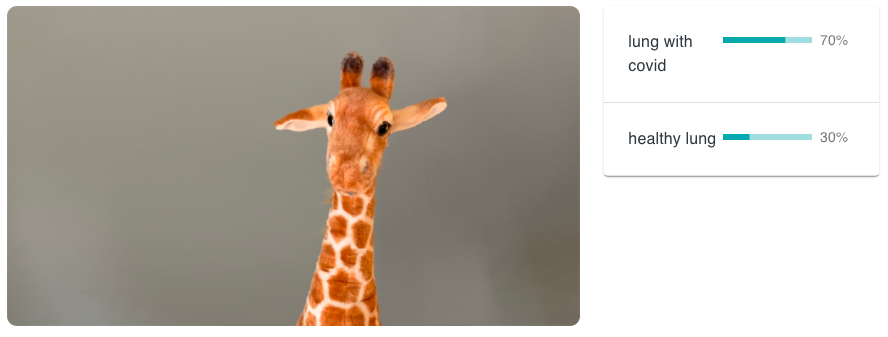

The phenomenon does lend itself to certain abuses.

And it's also clear how without a way to express uncertainty, we can end up with a very broken AI setup that is still confidently returning answers.

It should be noted that my sabotage is only partially responsible for the AI's mistakes.

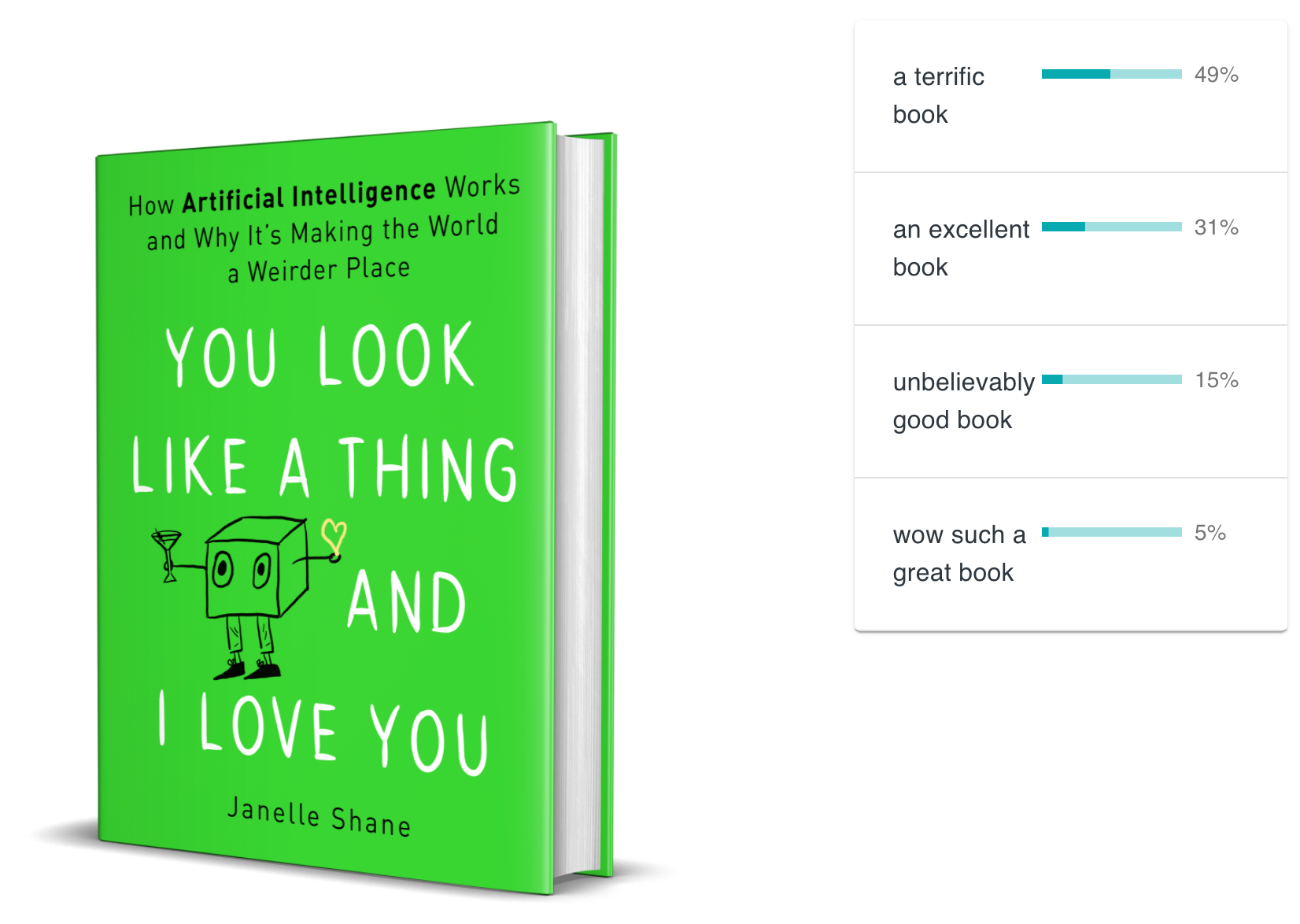

Still, I think we can all agree that the AI is very correct sometimes.

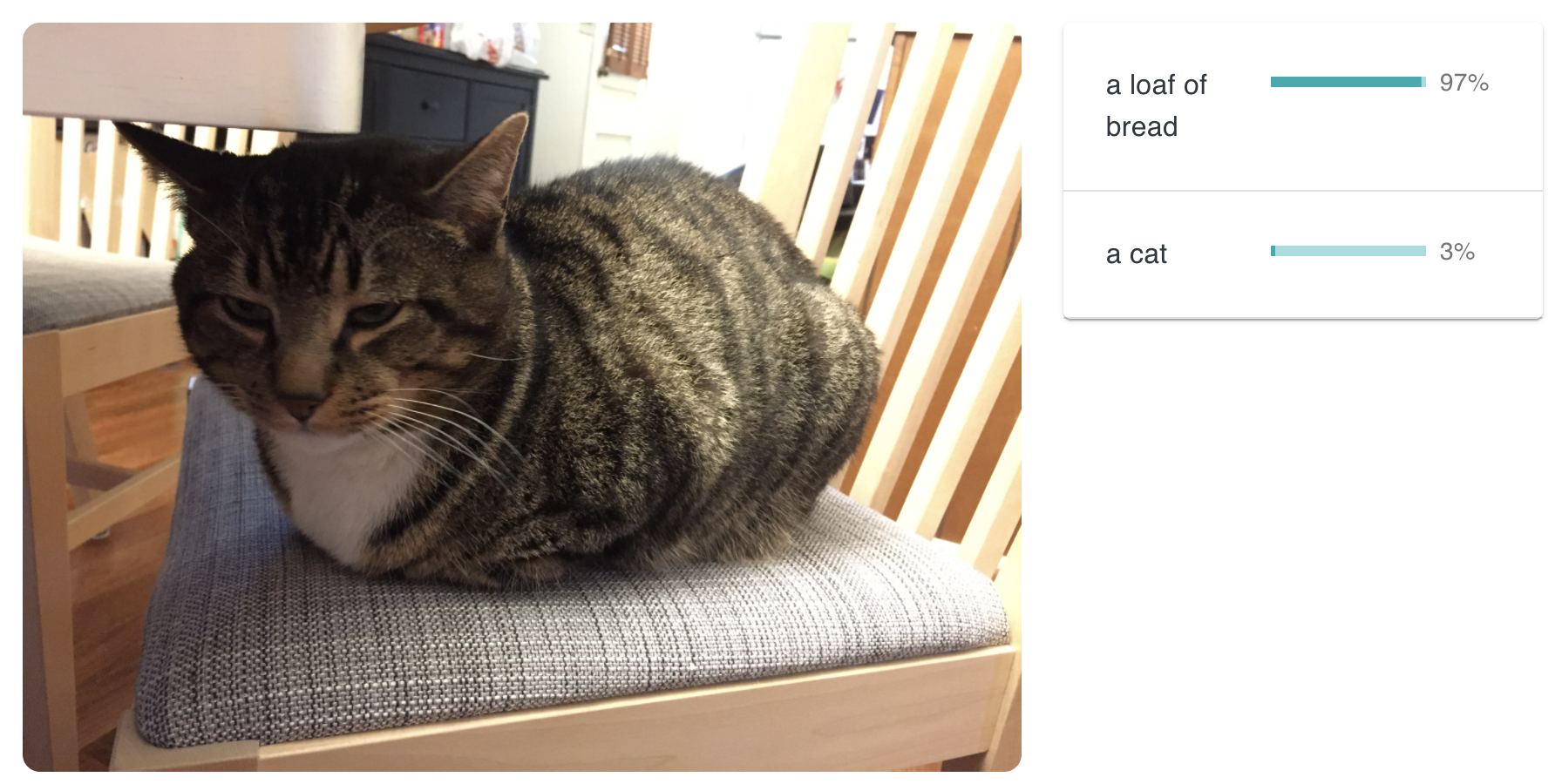

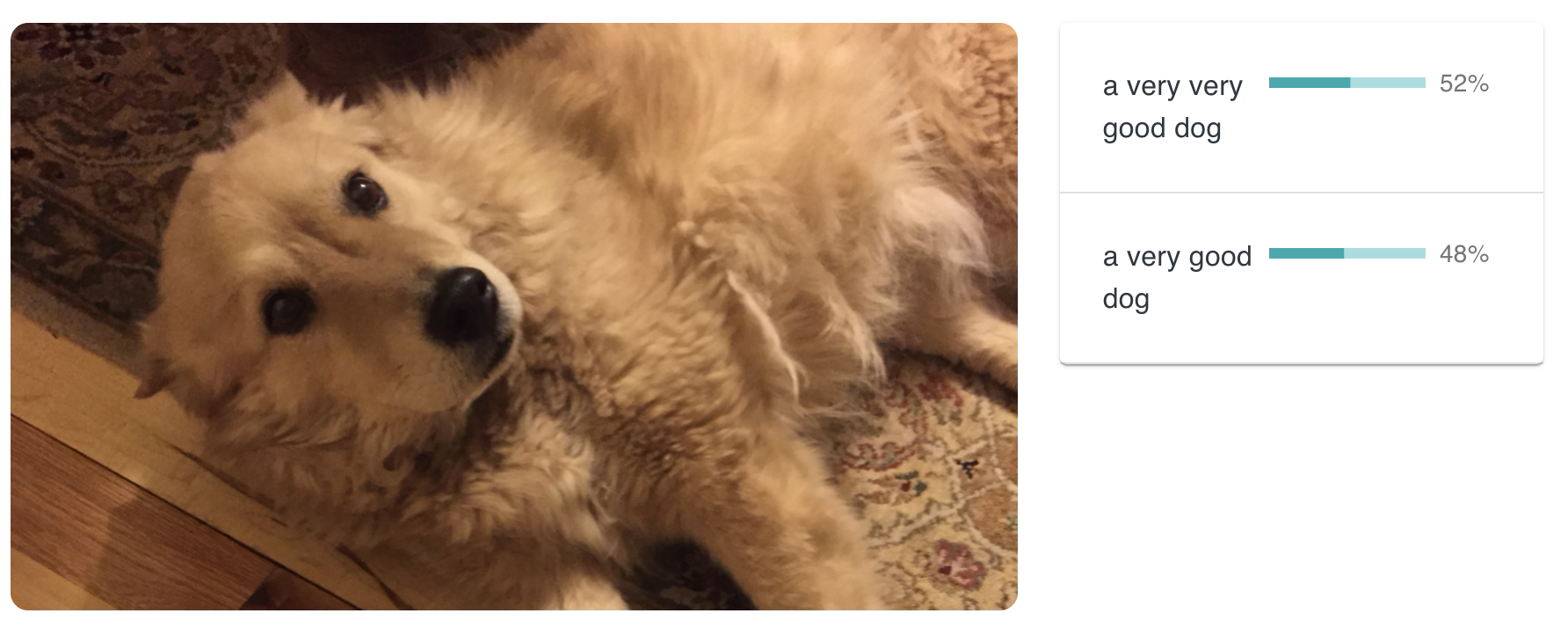

Bonus post: more snap judgements from CLIP backpropagation (they're mostly of my cat).