For a couple [https://www.aiweirdness.com/botober2021/] of

[https://www.aiweirdness.com/628160900506025984/] years

[https://www.aiweirdness.com/ainktober-a-neural-net-creates-drawing-19-09-26/]

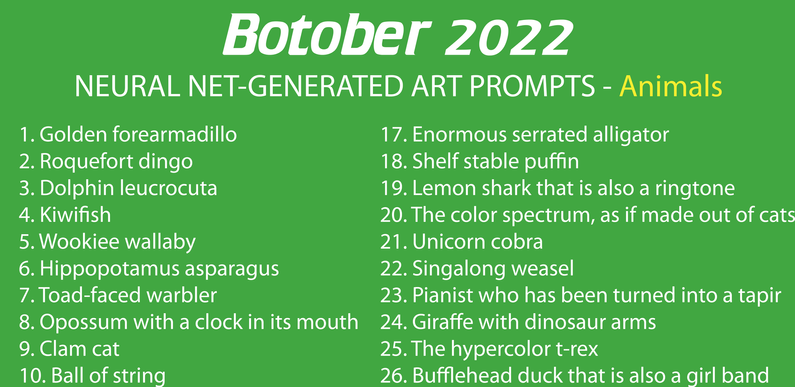

now I've been using neural networks to generate daily drawing prompts. With

today's text-generating neural networks far too large to finetune on a list of

existing