AI Weirdness advent calendar 2023

It's 2023 and the combo of GPT-4/DALL-E3 can generate passable versions of the saccharine Christmas drawings in an advent calendar. They cannot, however, label them correctly. Also sometimes you get sweatermugs. This means the 2023 AI-generated advent calendar is happening!

Full descriptions of every door in the calendar

1. "snodlee" - a bowl of cookies and either snowballs or hard-boiled eggs.

2. "Snov Phidde" - a snowman in an ornament, with its mittened hands raised in triumph.

3. "Cookle" - one enormous and two tiny candles in the same dish.

4. "Fendli" - a single snowflake.

5. "Frristke" - a festive bunch of holly with a ribbon.

6. "Elf" - a vintage-looking pink drink with holly falling into it.

7. "Pmelig" - a cute elf with an unreadable caption. The first letter is something like a p or an m, and the last letter is something like a g or an i.

8. "Hot ocoate" - a mug of hot chocolate, except the mug is made out of a sweater with trailing arm-tentacles.

9. "Christmang" - a bundt cake with frosting.

10. "Piod" - a cup of soup with a candy cane and holly in it.

11. "Hgfty tpe" - a present drawn as a halftoned illustration.

12. "Hendeer" - a fireplace hung with stockings, one of which has two toes and no heel.

13. "Stocking" - a glass of milk topped with a Santa hat.

14. "Slelly" - a sleigh bell sitting on a set of mince pies.

15. "Snowsman" - a snowman.

16. "Gells" - an opaque red drink capped in a sharp layer of white, with a red bendy straw in it.

17. "Gedowmad" - a gingerbread house.

18. "Ice skates" - a boot (with no ice skating blade on it).

19. "mkle boon" - some cookies dotted with either holes or extremely dense currants.

20. "Snow glob" - a snow globe containing a Christmas tree.

21. "Elw" - an ornament containing other ornaments.

22. "Fuielplce" - a snowman in a festive sweater.

23. "Snowsmino" - a cup of cocoa with very tall whipped cream and two cinnamon sticks, with two broken hollow candy canes in the foreground.

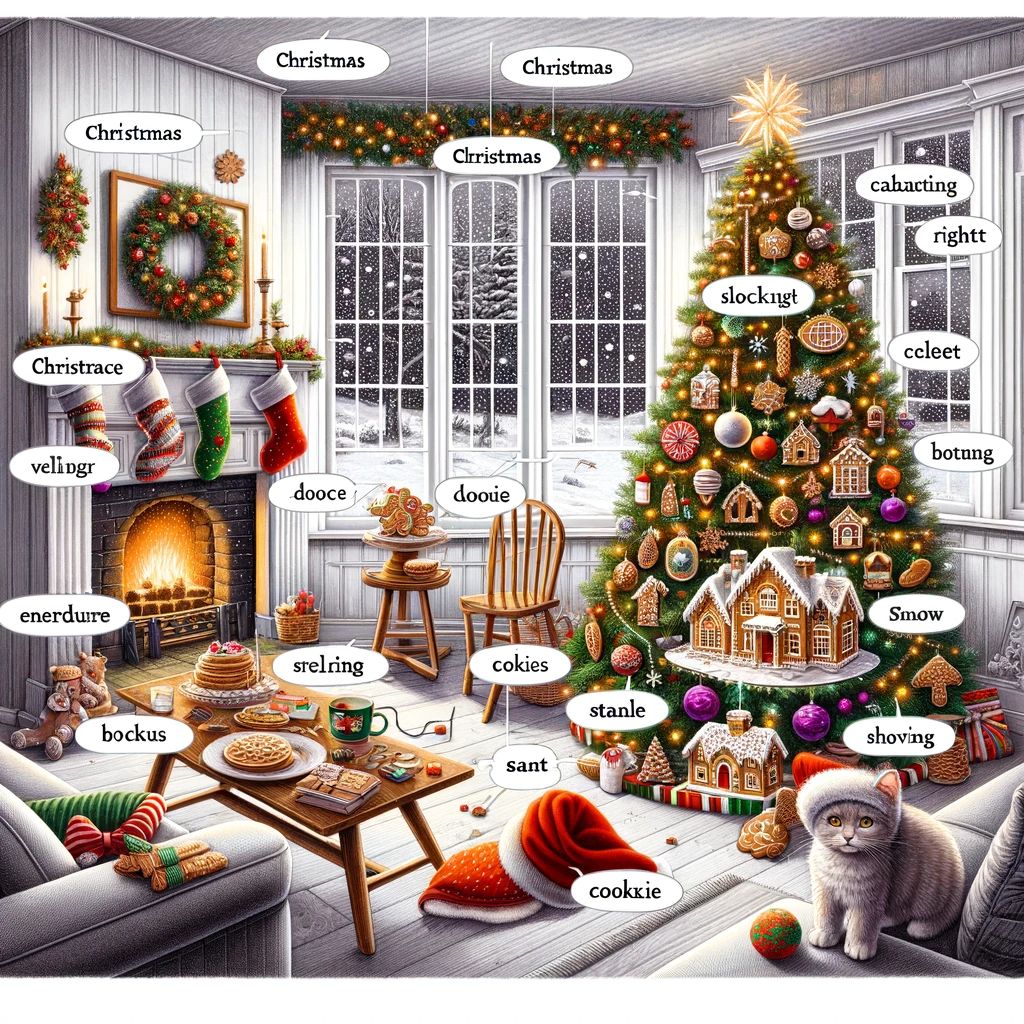

24. An indoor Christmas scene with a tree (unlabeled) and a train set consisting entirely of locomotives and pieces of locomotives ("choot,r"). A chair ("chaiir") has a cushion labeled "treee", and there are cookies strewn all over the floor ("cook'es"). The window has a door handle and a mistletoe labeled "ee".

How this worked: I'm using ChatGPT4, which has an interface with DALL-E3. I discovered pretty quickly that I couldn't get labeled images by asking directly for labeled images from an advent calendar, or even for a set of simple Christmas-themed images. Rather than label them with letters, it would add numbers each time:

I ended up having to ask for something else: I asked it for a "set of Christmas-themed images, clearly labeled in capital letters as an aid to someone learning English." I could then pick my favorite images out of the set.

How does it end up with so many mislabelings and misspellings? It seems to be DALL-E3's fault. When I ask ChatGPT4 to ask DALL-E3 to generate something, rather than send my request directly to DALL-E3, ChatGPT4 changes it. For the image above, my prompt was "Please generate another grid with the next set of Christmas-themed images, clearly labeled in capital letters as an aid to someone learning English. Also please print the full text of the prompt used to generate the image." What ChatGPT4 reports it actually sent to DALL-E3 was:

A grid of six new Christmas themed images on a white background, each clearly labeled in all capital letters to assist English learners. Top left: A Christmas wreath, labeled 'WREATH'. Top center: A gingerbread man cookie, labeled 'GINGERBREAD MAN'. Top right: A pair of ice skates, labeled 'ICE SKATES'. Bottom left: A snow globe, labeled 'SNOW GLOBE'. Bottom center: A Christmas sweater, labeled 'SWEATER'. Bottom right: A cup of hot chocolate, labeled 'HOT CHOCOLATE'. Each label is in a bold, clear font for easy reading.
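For anyone who wants to line up what was requested against what showed up in the image, here is a rough Python sketch. It is not part of how the calendar was made. It just pulls the requested labels out of the reported prompt with a regular expression and scores a few rendered captions against them using the standard library's `difflib`. The function names are made up for illustration, and the pairing of rendered captions with requested labels is an assumption based on the door descriptions above.

```python
import re
from difflib import SequenceMatcher

# The prompt ChatGPT4 reported sending to DALL-E3, copied from the post.
REPORTED_PROMPT = (
    "A grid of six new Christmas themed images on a white background, "
    "each clearly labeled in all capital letters to assist English learners. "
    "Top left: A Christmas wreath, labeled 'WREATH'. "
    "Top center: A gingerbread man cookie, labeled 'GINGERBREAD MAN'. "
    "Top right: A pair of ice skates, labeled 'ICE SKATES'. "
    "Bottom left: A snow globe, labeled 'SNOW GLOBE'. "
    "Bottom center: A Christmas sweater, labeled 'SWEATER'. "
    "Bottom right: A cup of hot chocolate, labeled 'HOT CHOCOLATE'. "
    "Each label is in a bold, clear font for easy reading."
)


def intended_labels(prompt: str) -> list[str]:
    """Return every quoted label the prompt asks for, in order."""
    # Matches the text inside quotes in phrases like: labeled 'WREATH'
    return re.findall(r"labeled '([^']+)'", prompt)


def similarity(intended: str, rendered: str) -> float:
    """Rough 0-to-1 score of how close the rendered text is to the request."""
    return SequenceMatcher(None, intended.lower(), rendered.lower()).ratio()


labels = intended_labels(REPORTED_PROMPT)
print(labels)

# Assumption: these captions from the calendar correspond to these
# requested labels. The post does not state the pairing explicitly.
rendered_guess = {
    "ICE SKATES": "Ice skates",
    "SNOW GLOBE": "Snow glob",
    "HOT CHOCOLATE": "Hot ocoate",
}
for want, got in rendered_guess.items():
    print(f"{want!r} -> {got!r}: {similarity(want, got):.2f}")
```

A score of 1.0 means the caption survived intact (ignoring case), and anything lower means some letters went missing along the way.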

I can't know for sure if this is the real prompt, but it does match the beginning of the filename auto-generated by DALL-E3. If it is, it seems ChatGPT4 is all "Gingerbread man" and DALL-E3 is all "got it, gingerbowman". This gets particularly funny when I ask it to do an entire scene. See, for example, the image for Dec 24:

When there are more than nine items, and they aren't in a grid anymore, it's more obvious that DALL-E3 isn't matching labels with items, just scattering them around. Here's another Christmas scene.

One big difference between AI-generated images and human-created art is that the longer you look at the AI stuff, the worse it looks. What are those things on the floor? Why is the snow falling in a grid? What is the red thing floating in the coffee mug? And why is the mug sending filaments snaking out toward the tree? Merry Clrristmas I guess!

Bonus content: some more classic Christmas scenery, helpfully labeled by DALL-E3.