It takes a bot to know one?

A couple of weeks ago, I wrote about GPT-2, a text-generating algorithm whose huge size and ability to keep track of long stretches of text mean that it can generate text with an impressive degree of coherence. So impressive, in fact, that its programmers at OpenAI have only released a mini version of the model for now, worried that people may abuse the full-size model’s easy-to-generate, almost-plausibly-human text.

(below: some text generated by mini-GPT-2, in response to the prompt in italics)

This was a fantastic recipe for chocolate cake with raspberry sauce! I only made a couple of changes to the recipe. First, I added vanilla candles instead of meringues for a more mild and exotic fragrance. Once again, I only used 1 tsp of vanilla syrup for clarity. Second, the chocolate cake whipped cream was tempered by an additional 1 tsp of canola oil. The regular vegan whipped cream is soothing and makes it pleasing to the hungry healthiest person I know!

In the meantime, as OpenAI had hoped, people are working on ways to automatically detect GPT-2’s text. Using a bot to detect another bot is a strategy that can work pretty well for detecting fake logins, video, or audio. And now, a group from the MIT-IBM Watson AI Lab and Harvard NLP has come up with a way of detecting fake text, using GPT-2 itself as part of the detection system.

The idea is fairly simple: GPT-2 is better at predicting what a bot will write than what a human will write. So if GPT-2 is great at predicting the next word in a bit of text, that text was probably written by an algorithm - maybe even by GPT-2 itself.
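To make that idea concrete, here is a minimal sketch of rank-based detection. It is not GLTR's actual code: a tiny bigram word counter stands in for GPT-2, and the function names (`train_bigram`, `rank_of_next`, `bucket`, `color_text`) are made up for illustration. For each word, it asks how high the model ranked that word among its guesses, then sorts the ranks into color buckets. The default cutoffs of 10, 100, and 1000 are my assumption about how a demo like this might bucket ranks, not something taken from the tool itself.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count which word follows which. A toy stand-in for GPT-2."""
    following = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        following[prev][nxt] += 1
    return following

def rank_of_next(model, prev, actual):
    """1-based rank of `actual` among the model's guesses after `prev`.

    Returns None if the model never saw `actual` follow `prev`.
    """
    ranked = [word for word, _ in model.get(prev, Counter()).most_common()]
    return ranked.index(actual) + 1 if actual in ranked else None

def bucket(rank, limits=(10, 100, 1000)):
    """Map a rank to a color. The default limits are an assumption."""
    if rank is None:
        return "purple"
    for limit, color in zip(limits, ("green", "yellow", "red")):
        if rank <= limit:
            return color
    return "purple"

def color_text(model, tokens, limits=(10, 100, 1000)):
    """Color every word after the first by how predictable it was."""
    return [(nxt, bucket(rank_of_next(model, prev, nxt), limits))
            for prev, nxt in zip(tokens, tokens[1:])]

model = train_bigram("the cat sat on the mat the cat ate the fish".split())

# Tiny limits here so the toy vocabulary can spread across colors.
for word, color in color_text(model, "the cat sat on the dog".split(),
                              limits=(1, 2, 3)):
    print(word, color)
```

A text where nearly every word lands in the top bucket is one the model could have written itself. A text full of words the model ranked low, or never guessed at all, looks less like the model's own output.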

There’s a web demo that they’re calling Giant Language model Test Room (GLTR), so naturally I decided to play with it.

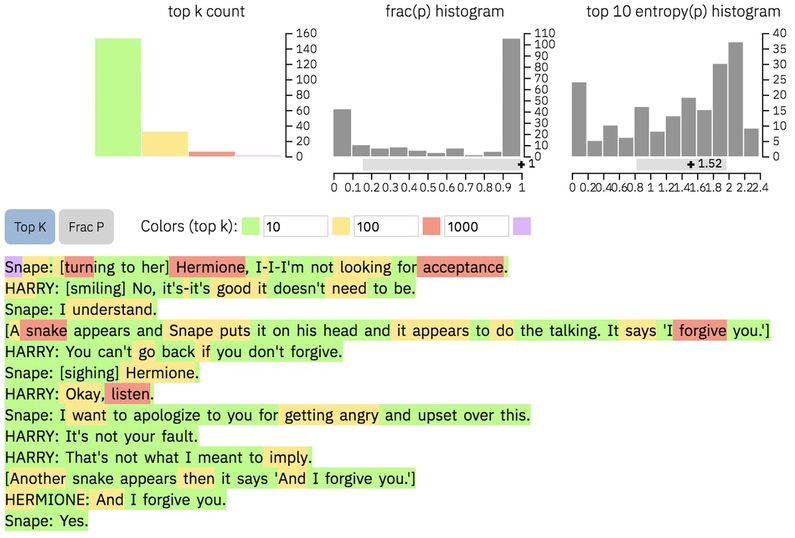

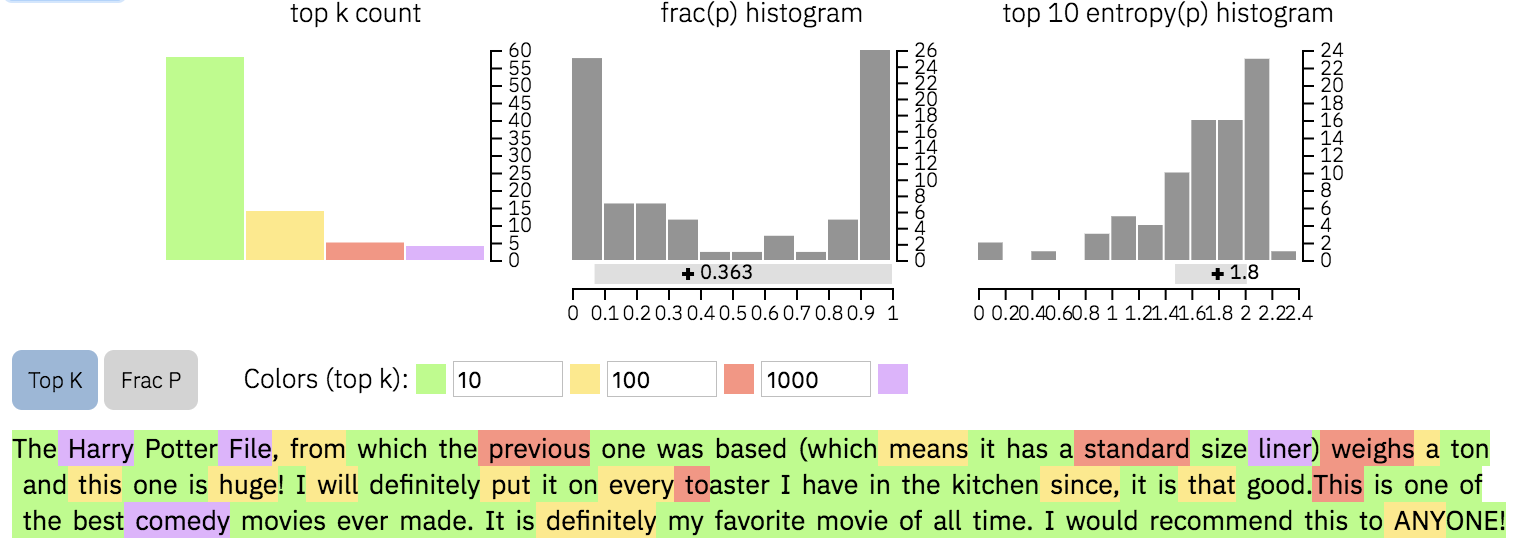

First, here’s some genuine text generated by GPT-2 (the full-size model, thanks to the OpenAI team being kind enough to send me a sample). Green words are ones that GLTR thought were very predictable, yellow and red words are less predictable, and purple words are ones the algorithm definitely didn’t see coming. There are a couple of mild surprises here, but mostly the AI knew what would be generated. Seeing all this green, you’d know this text is probably AI-generated.

![HERMIONE: So, you told him the truth?

Snape: Yes.

HARRY: Is it going to destroy him? You want him to be able to see the truth.

Snape: [turning to her] Hermione, I-I-I'm not looking for acceptance.

HARRY: [smiling] No, it's-it's good it doesn't need to be.

Snape: I understand.

[A snake appears and Snape puts it on his head and it appears to do the talking. It says 'I forgive you.']

HARRY: You can't go back if you don't forgive.

Snape: [sighing] Hermione.

HARRY: Okay, listen.

Snape: I want to apologize to you for getting angry and upset over this.

HARRY: It's not your fault.

HARRY: That's not what I meant to imply.

[Another snake appears then it says 'And I forgive you.']

HERMIONE: And I forgive you.

Snape: Yes.](https://www.aiweirdness.com/content/images/public/images/a2664a84-6f90-421e-bbcc-f24594d84951_1354x916.png)

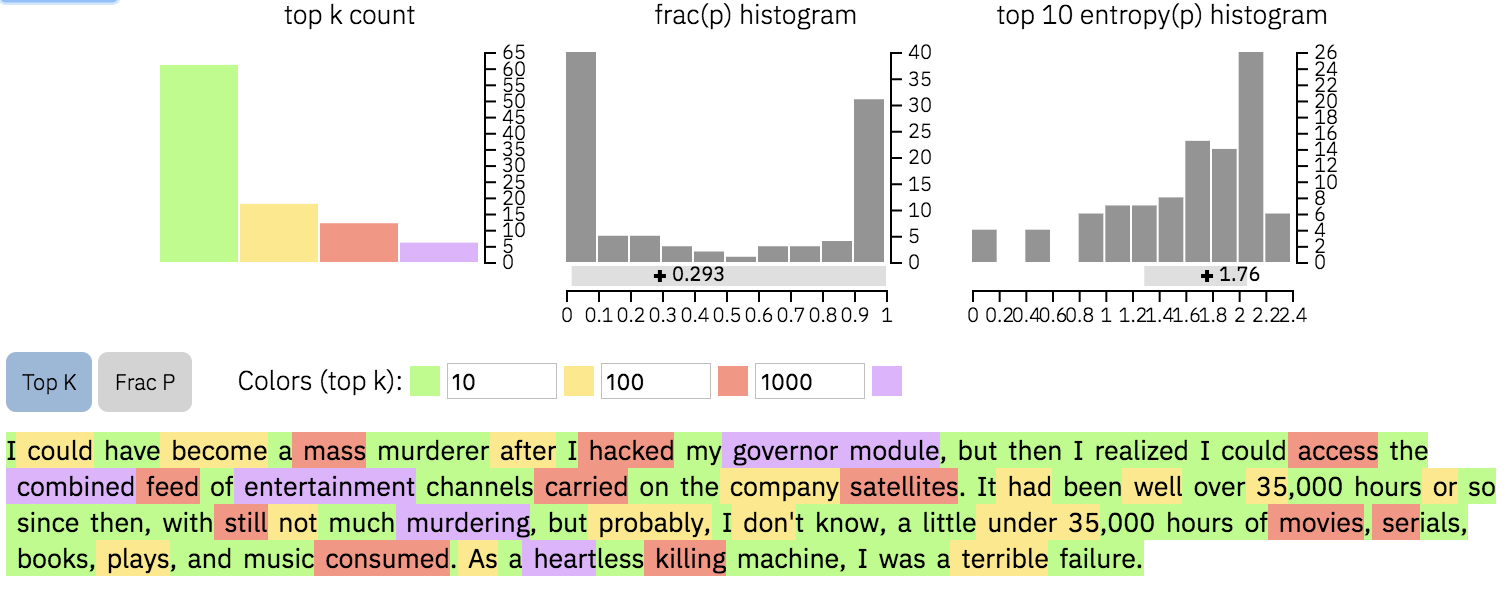

Here, on the other hand, is how GLTR analyzed some human-written text, the opening paragraph of The Murderbot Diaries. There’s a LOT more purple and red. It found this human writer to be more unpredictable.

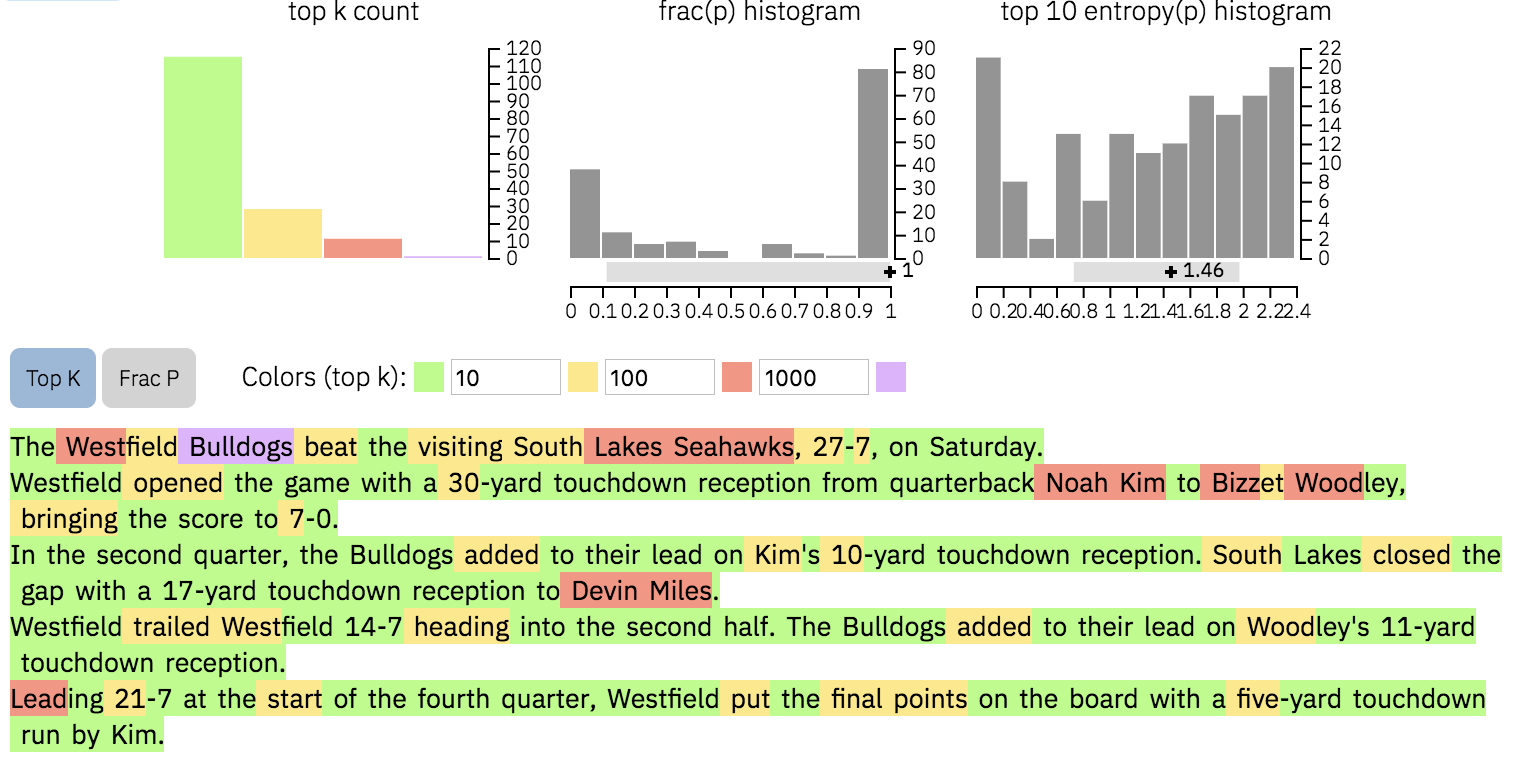

But can GLTR detect text generated by another AI, not just text that GPT-2 generates? It turns out it depends. Here’s text generated by another AI, the Washington Post’s Heliograf algorithm that writes up local sports and election results into simple but readable articles. Sure enough, GLTR found Heliograf’s articles to be pretty predictable. Maybe GPT-2 had even read a lot of Heliograf articles during training.

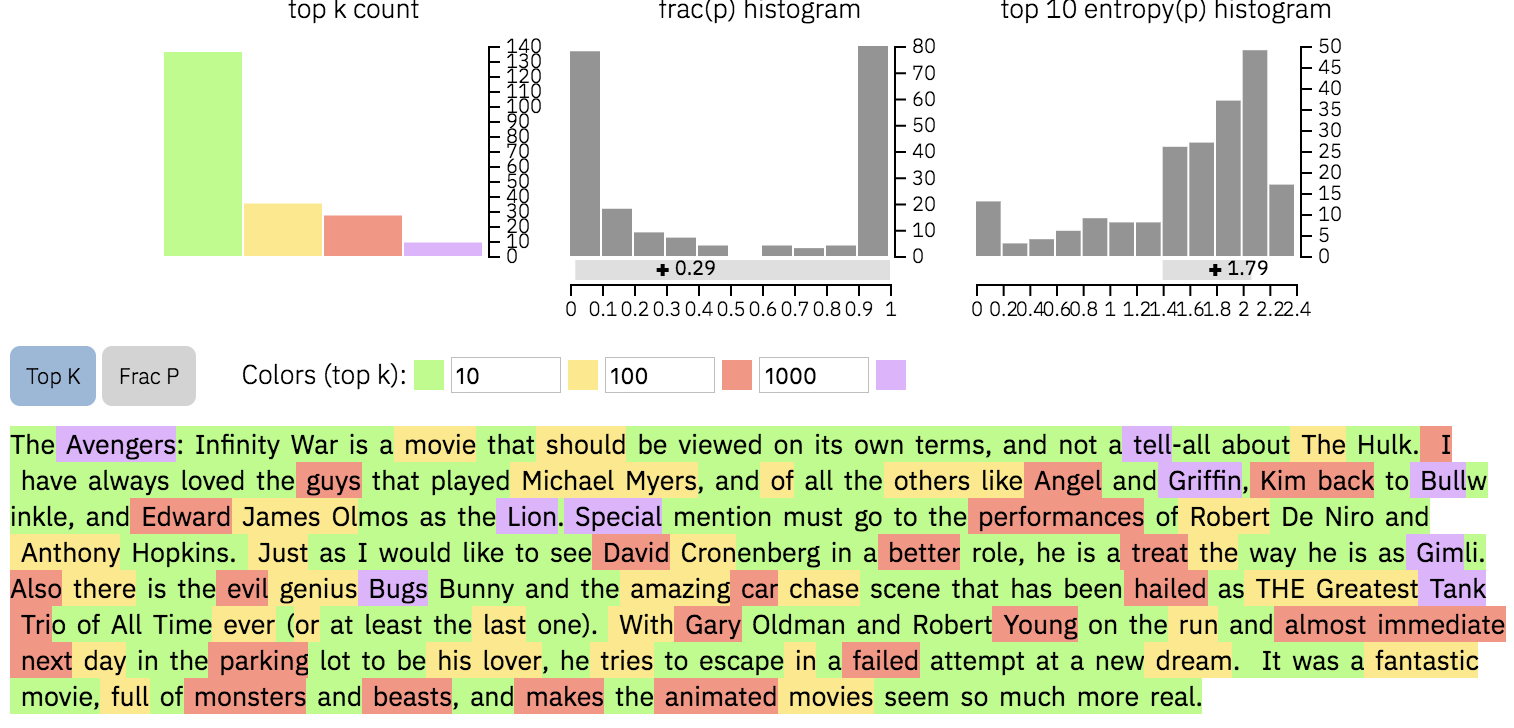

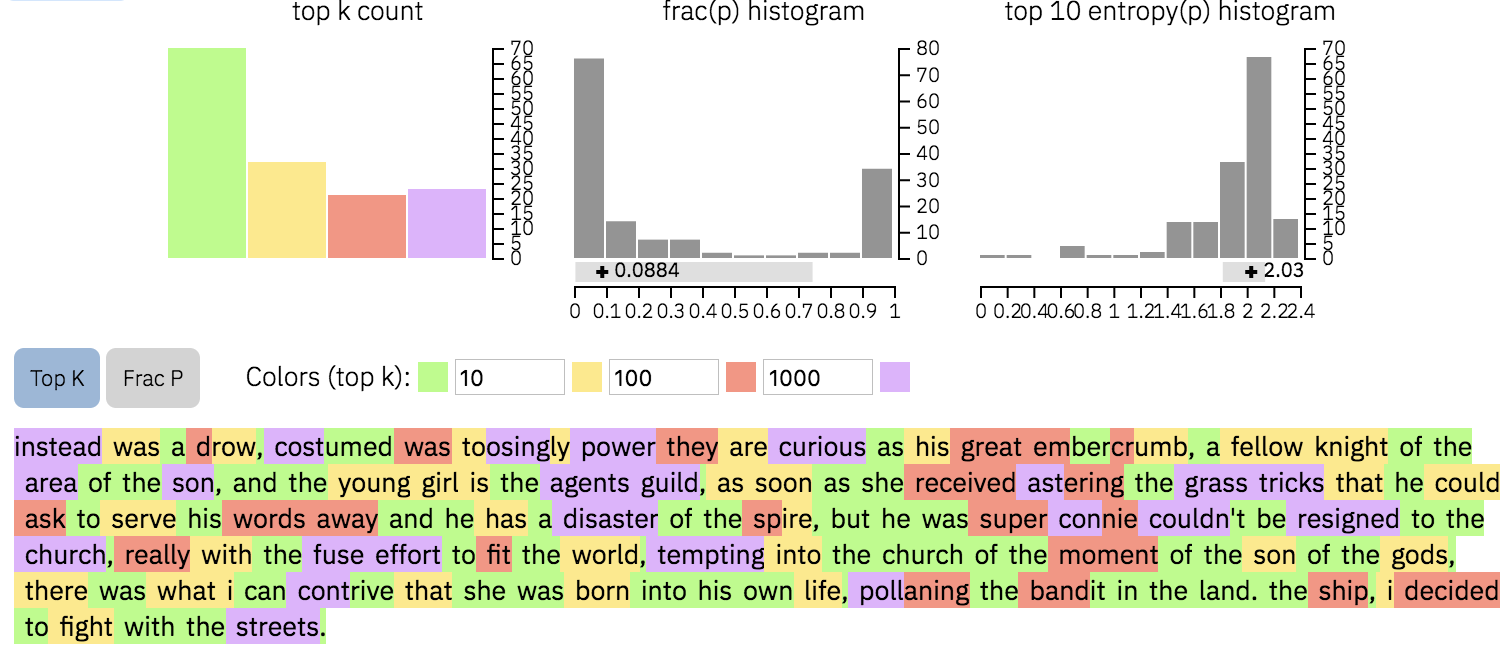

However, here’s what it did with a review of Avengers: Infinity War that I generated using an algorithm Facebook trained on Amazon reviews. It’s not an entirely plausible review, but to GLTR it looks a lot more like the human-written text than the AI-generated text. Plenty of human-written text scores in this range.

And here’s how GLTR rated another Amazon review by that same algorithm. A human might find this review to be a bit suspect, but, again, the AI didn’t score this as bot-written text.

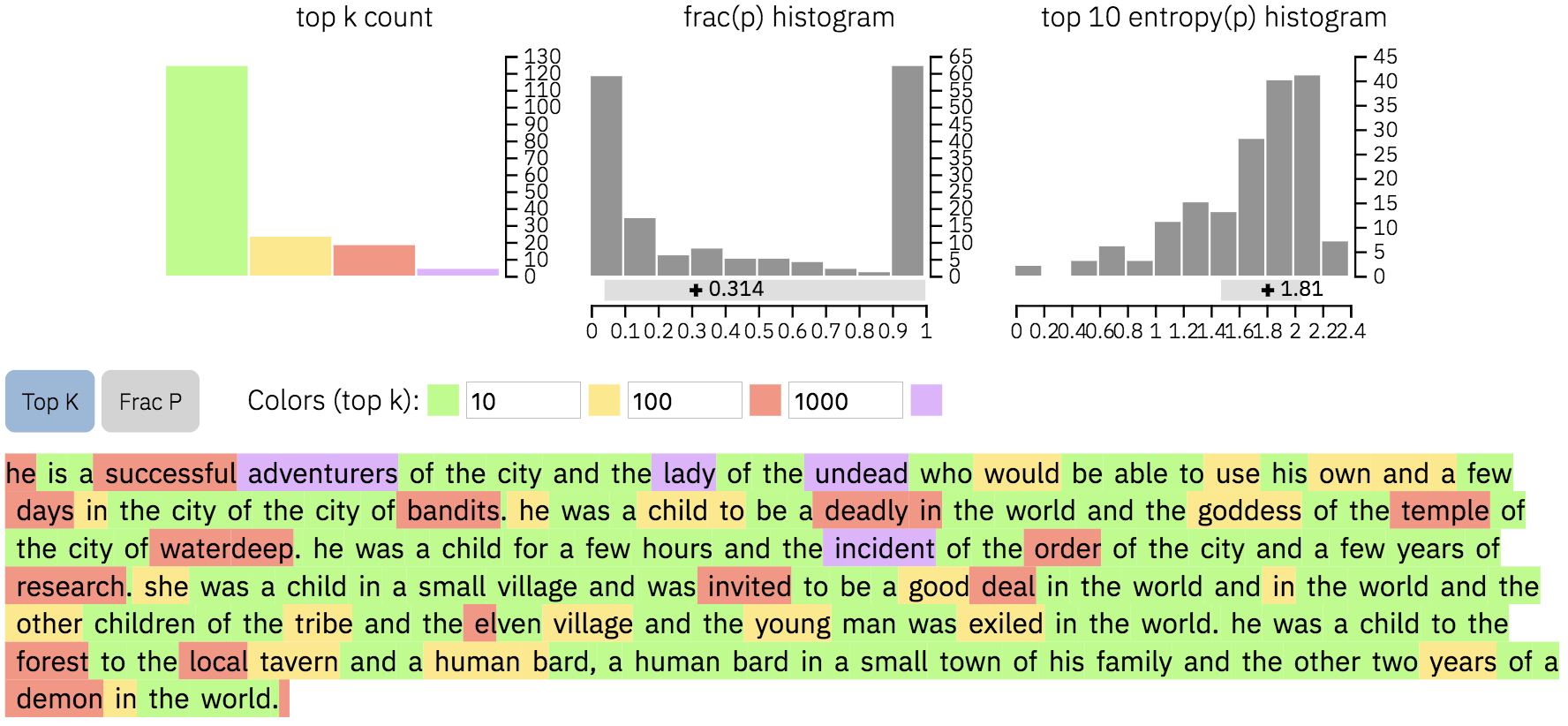

What about an AI that’s really, really bad at generating text? How does that rate? Here’s some output from a neural net I trained to generate Dungeons and Dragons biographies. Whatever GLTR was expecting, it wasn’t fuse efforts and grass tricks.

But I generated that biography with the creativity setting turned up high, so my algorithm was TRYING to be unpredictable. What if I turned the D&D bio generator’s creativity setting very low, so it tries to be predictable instead? Would that make it easier for GLTR to detect? Only slightly. It still looks like unpredictable human-written text to GLTR.
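For the curious, here is a rough sketch of what a creativity setting does, assuming it works like the sampling temperature used by many text generators (that is my assumption; the details of my D&D generator aren't shown here). The scores and the function name `softmax_with_temperature` are made up for illustration.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, rescaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up scores for three candidate next words.
scores = [2.0, 1.0, 0.0]

low = softmax_with_temperature(scores, 0.2)   # low creativity
high = softmax_with_temperature(scores, 2.0)  # high creativity

print("low: ", [round(p, 3) for p in low])
print("high:", [round(p, 3) for p in high])
```

At a low setting nearly all the probability piles onto the generator's own favorite word, and at a high setting it spreads out across the alternatives. Note that this only makes the text predictable to the generator that wrote it. GPT-2 has its own, different favorites, which fits with GLTR being only slightly less surprised.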

GLTR is still pretty good at detecting text that GPT-2 generates - after all, it’s using GPT-2 itself to do the predictions. So, it’ll be a useful defense against GPT-2 generated spam.

But, if you want to build an AI that can sneak its text past a GPT-2 based detector, try building one that generates laughably incoherent text. Apparently, to GPT-2, that sounds all too human.

For more laughably incoherent text, I trained a neural net on the complete text of Black Beauty, and generated a long rambling paragraph about being a Good Horse. To read it, and GLTR’s verdict, become an AI Weirdness supporter to get it as bonus content.