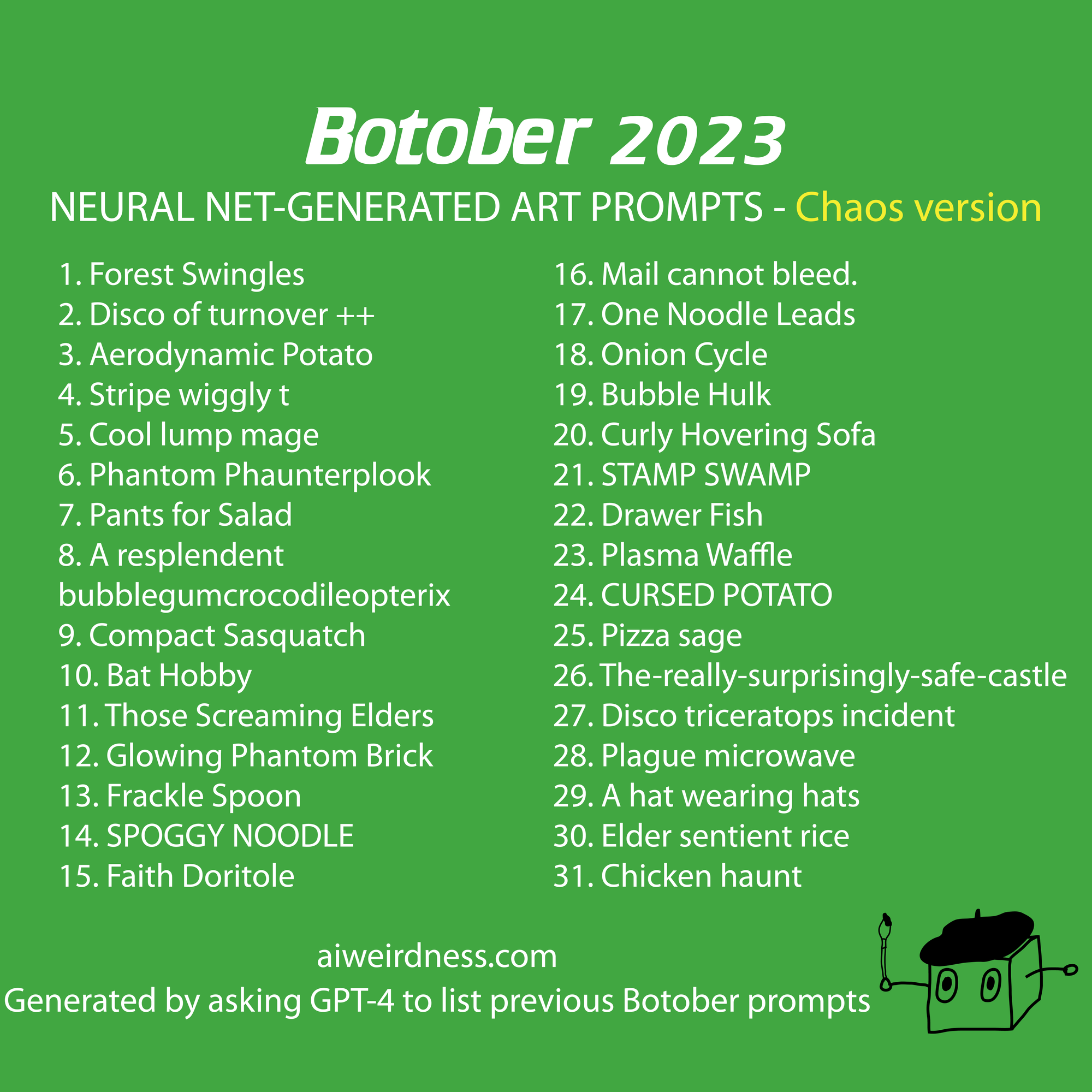

Botober 2023

Since 2019 I've generated October drawing prompts using the year's most state-of-the-art text-generating models. Every year the challenges are different, but this was one of the hardest years yet. Large language models like ChatGPT, GPT-4, Bing Chat, and Bard are all tweaked to produce generic, predictable text that doesn't vary much from trial to trial. ("A Halloween drawing prompt? How about a spooky graveyard! And for a landscape, what about a sunset on a beach?")

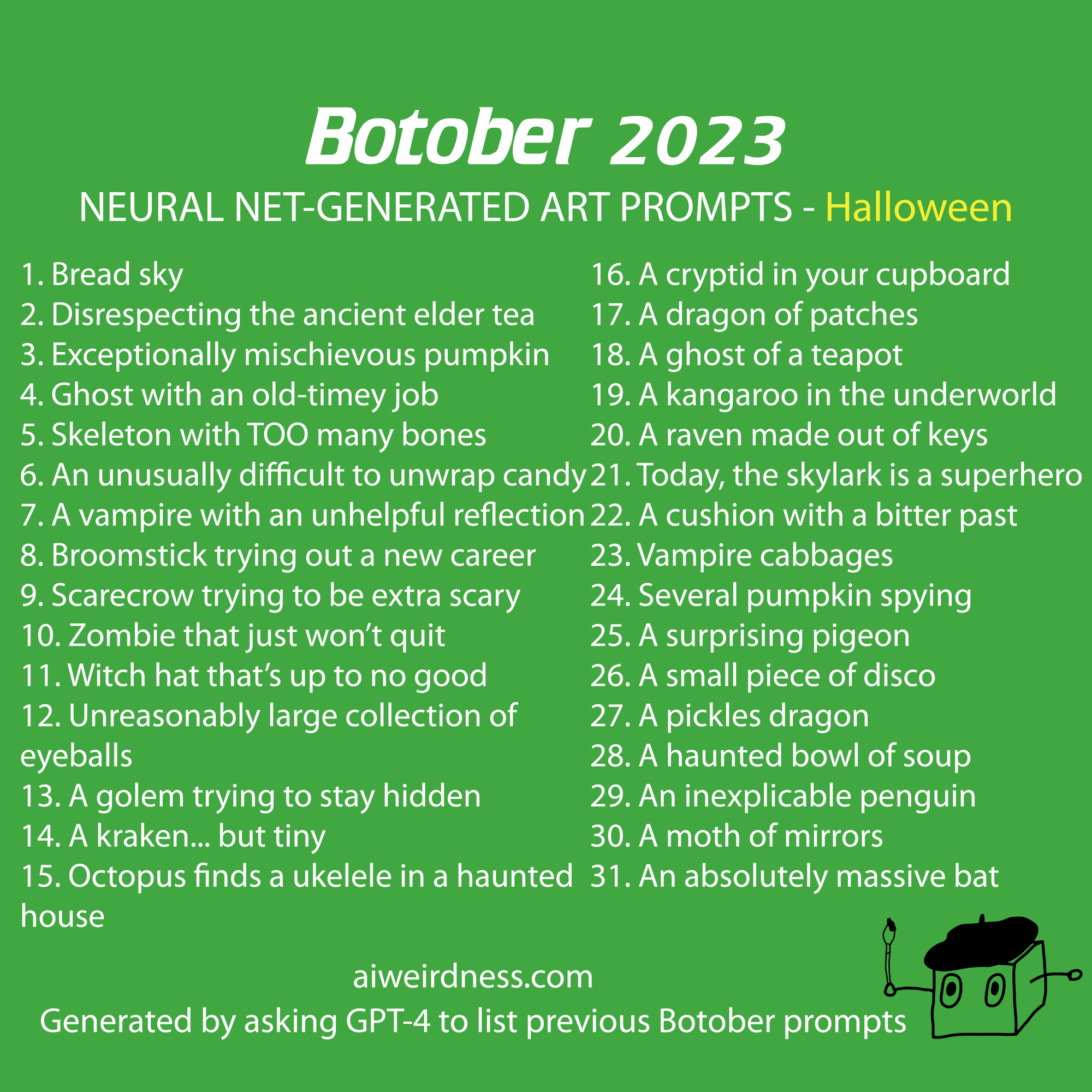

I had my best success with GPT-4, and only because it's a bit bad at what it's trying to do. What I did was simply ask it to list previous Botober prompts from AI Weirdness. Now, if GPT-4 were really a knowledge repository rather than a probable-text-predictor, it would respond with copies of previous prompts. Instead it writes something like "Sure, here are the Botober 2019 drawing prompts from AI Weirdness blog:" and then generates lists that bear no resemblance to what appeared on my blog. By asking for the lists from previous years (and ignoring it when it claims there was no prompt list in 2020, or that the one in 2021 was secret or whatever) I was able to collect 31 mostly Halloween-themed prompts.

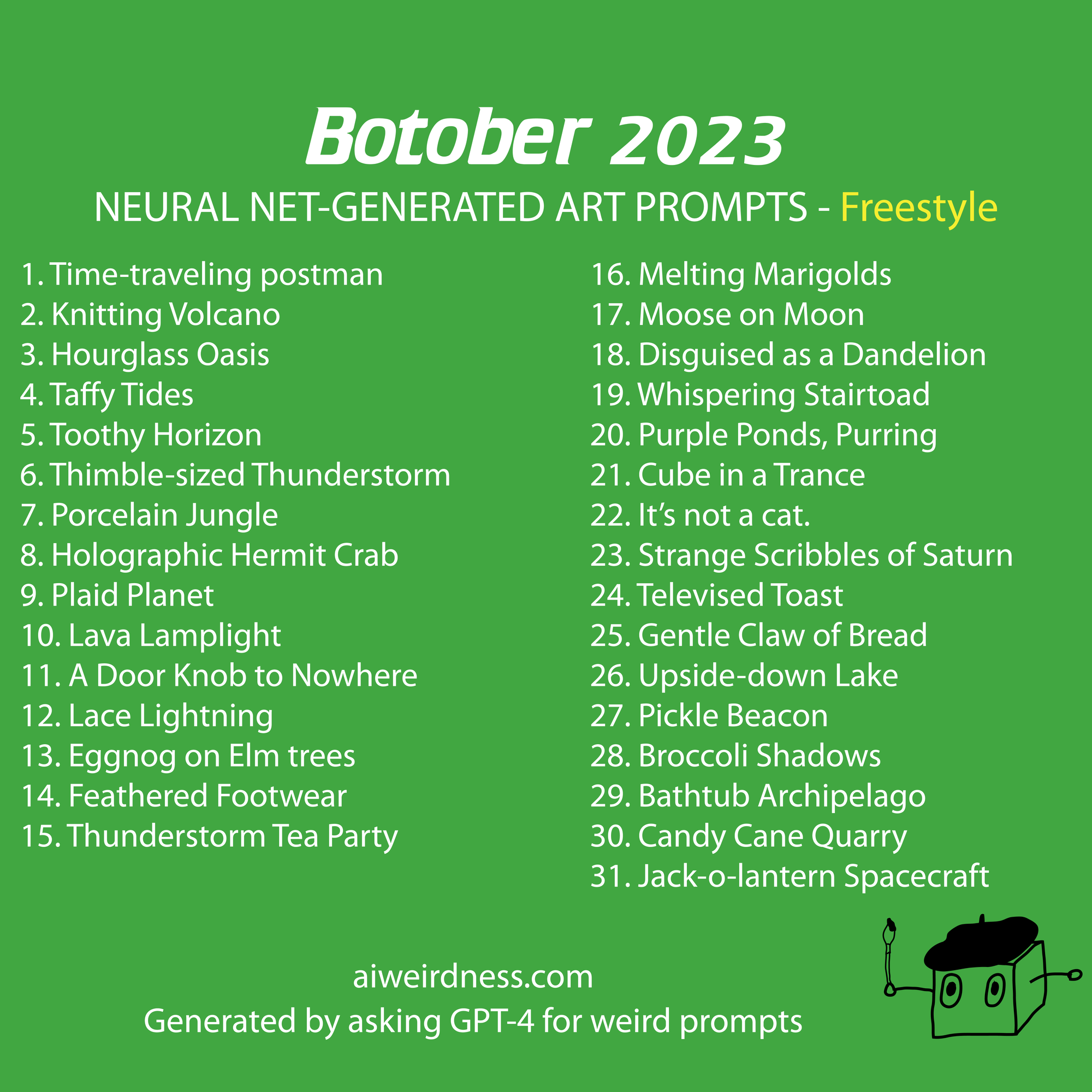

I also tried just asking GPT-4 to generate drawing prompts, but it had a tendency to collapse into same-sounding alliterative alphabetical lists (I previously noticed this when I was trying to get it to generate novelty sock patterns too). If I specifically asked it to knock it off with the alliteration, it would politely promise to do so, and then the problem would become worse. My best results came when I asked it to generate the prompts in the style of an early AI Weirdness post. It wasn't anything like the actual text generated by the early neural networks, but it was at least a bit more interesting. Here are 31 of the most interesting prompts I got from this method. They're not bad, but something about them still reads as AI-generated to me, maybe because I had to read through so many of them.

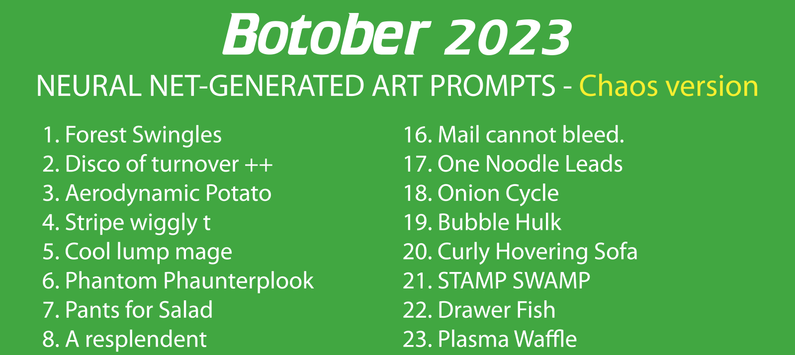

My favorite results were from when I asked it to list previous Botober prompts, while at the same time I increased the chaos level (also called the temperature setting) beyond the 1.0 default. At higher chaos levels, GPT-4 doesn't always select the most probable prediction, but might respond with an answer that was further down the probability list. At low chaos levels you get dull repetition, while at high chaos levels you get garbage. For me, the sweet spot was at 1.2, where GPT-4's answers would start strange and then descend into run-on incoherence. I had to have it generate the text again and again so I could collect 31 reasonable-length responses.
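To make the chaos setting a bit more concrete, here's a minimal sketch of how temperature is commonly applied when picking the next word: the model's raw scores are divided by the temperature before being turned into probabilities. This is a toy illustration, not GPT-4's actual code. The word list, the scores, and the function names `softmax_with_temperature` and `sample_token` are all made up for this example.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    # Subtract the max before exponentiating for numerical stability.
    biggest = max(scaled)
    exps = [math.exp(s - biggest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng=random):
    """Pick one token at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical next-word candidates and scores, invented for illustration.
tokens = ["graveyard", "pumpkin", "turnip", "matibrush"]
logits = [4.0, 3.0, 1.0, -1.0]

for temperature in (0.2, 1.0, 1.2, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At a low temperature nearly all of the probability piles onto the top-scoring word, which is where the dull repetition comes from. As the temperature goes up, the distribution flattens and the words further down the list get picked more often.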

Here are examples of some of the longer responses I got with GPT-4 and chaos setting 1.2. They wouldn't fit in the grid, but please feel free to substitute any of these for any of the prompts above.

Immortal Turnips disrespecting celery.

OWL PHANTOM AND CABBAGE-O-LANTERN (LIGHT)

Final parsnips in particular

Before memory, an ancient tool waits to be discovered

The Candle That Leapt over the Lake at dusk does a pirouette

dussewe wind at earth bubb

submission velvet little hurricane breakfast blown in be putty and goodness vodka… gaselocity scoct slices vold cave with

When humans item. And those humans aren’t ordinary.

See through your balloon eyes

The matibrush comes tomorrow in shorts

Retired Pirate Chooses Invisible Beard

Open the Happy Bones

The Cursed Flavor Fiend Slurps your Essence.

Glance of the lavender popcorn mussel

Eureka! Solar system pancakes

Pause to contemplate radishes

Apple mystery solution—the crypt underneath

The totally real crown of all Barnacles

I mentioned that the other large language models were even worse at this than GPT-4.

Bing (also running GPT-4 but apparently with slightly different defaults) was the model that most often responded with real Botober prompts from previous years. I could usually count on it to at least come up with "sluggalope" before it descended into more formulaic prompts that never appeared on my blog. For example:

2021 (CLIP + DALL-E models):

A sluggalope

A cat with a human face

A dragon that is also a pineapple

A penguin with a jetpack

A unicorn that is also a cactus

A snail that is also a disco ball

A llama with a monocle and a top hat

A shark that is also a helicopter

A giraffe that is also a submarine

A hamster that is also a donut

Or Bing would generate:

The Botober drawing prompts that the AI Weirdness blog posted in 2019 on its "items" prompt list are as follows¹:

1. A squeakchugger

2. A very small dragon

3. A very large dragon

4. A very small dragon with a very large dragon

5. A very large dragon with a very small dragon

6. A very small dragon with a very large dragon and a squeakchugger

...

30. Take control of ostrich with a complete whoop, an incomplete whoop, a squeakchugger, a very small dragon, and a very large dragon

31. Take control of ostrich with all the other items

This might be what I would produce if I could only remember 5 actual Botober prompts and had to pad them into 31 prompts.

Bard was worse.

I apologize for my previous response. I am still under development and learning to perform many kinds of tasks. I am now able to list the rest of the Botober drawing prompts that appeared on the AI Weirdness blog in 2021:

Animals

A sloth wearing a sombrero

A cat with a human face

A dog with wings

A fish with legs

A bird with a beak shaped like a key

A snake with a horn on its head

A lion with a mane made of fire

A bear with a human body

...

Halloween

A pumpkin with a vampire face

A ghost cat

A witch riding a broomstick

A skeleton dancing

A zombie eating a brain

A werewolf howling at the moon

A vampire drinking blood

A Frankenstein monster

A mummy

A haunted house

A graveyard

A black cat

AI Weirdness this is not.

In all these cases, the models are not only spectacularly failing to "retrieve" the information that they claim to be finding, but they're also failing to reproduce the style of the original, always rounding it down toward some generic mean. If you're going to try to store all of the internet in the weights of a language model, there will be some loss of information. This experiment gives a picture of what kinds of things are lost.